HIGHLIGHTS:

- Received colorimeter. Tried to use it on monitor, but monitor is too far out of alignment to fix, apparently.

- Backed up project data to archive Blu-ray discs

- Set up volume storage system on Giles (using MergerFS)

- Installed Seafile on Giles

- Learned how to set up a Samba share (used it to share the volume storage disk, /ark/asw, on Sintel as /asw).

- Configured Giles, Sintel, and AnansiSpace.com for passwordless access

- Collected / synchronized my Ansible scripts, and created a Bazaar repo for them on Giles

- The CoViD-19 / SARS2 epidemic struck the USA about the middle of this month, and we began quarantine procedures to try to avoid catching it (or transmitting it if we do catch it).

- Lots of organization projects.

Basically, nothing went quite as I planned this month, but I did get a lot of things done, anyway.

Mar 14, 2020 at 4:01 PM

Volume-Based Storage

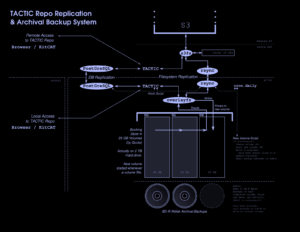

I drew the diagram at the top of this post in an article proposing a data architecture for my TACTIC deployment almost three years ago! Unfortunately, I hadn’t implemented any of it, and wouldn’t for all that time.

I started working on it again this week, and have made some progress on the bottom part of the diagram, which is my “volume storage” or “volstor” concept.

Background

The idea is to make a project data repository which is easy to back up to useful archives, which will serve not just for emergency recovery, but also as project documentation and history over time.

To do this, I wanted to break the data up into coarse chunks, chronologically, with each “volume” of a large fixed size, chosen to match the available backup media, which in this case is 25GB single-layer M-Disc BD-R media. I’ve chosen this medium, because it is reliable for long-term shelf storage. It’s essentially to be our “film vault”.
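As a rough illustration of the chunking idea (not the script actually used here), the sketch below groups files into fixed-size volumes in chronological order. The `Volume` class, the `pack_chronologically` function, and the 23 GB usable-capacity figure are all assumptions made for the example.

```python
from dataclasses import dataclass, field

# Assumed usable capacity per volume, leaving some headroom below the
# nominal 25GB disc size for filesystem overhead (hypothetical figure).
VOLUME_CAPACITY = 23 * 10**9


@dataclass
class Volume:
    files: list = field(default_factory=list)
    used: int = 0


def pack_chronologically(files, capacity=VOLUME_CAPACITY):
    """Group (path, mtime, size) tuples into volumes, oldest first.

    A new volume is started whenever the next file would overflow the
    current one, so each volume covers one contiguous span of time.
    """
    volumes = [Volume()]
    for path, mtime, size in sorted(files, key=lambda f: f[1]):
        if size > capacity:
            raise ValueError(f"{path} is larger than one volume")
        current = volumes[-1]
        if current.used + size > capacity:
            current = Volume()
            volumes.append(current)
        current.files.append(path)
        current.used += size
    return volumes
```

Filling strictly in date order wastes a little space at the end of each disc, but it keeps every volume a clean slice of project history, which is the point of the design.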

But this is not a convenient structure for everyday work, and I want all the data to be available in a file tree that makes logical sense from a use perspective, rather than from a historical perspective.

So I really need two “views” on the data. One is the underlying storage system, which maps easily to backup media. The other is the user perspective, which maps to a folder shared on our studio LAN (“local area network”).

This will replace an ad hoc collection of folders on my workstation and LAN file-server that I’ve been using to store this kind of information.

It’ll also be the back end for a more sophisticated keyword- and tag-based access system, which will be provided through the web applications TACTIC and/or ResourceSpace.

Implementation Progress

This week, I started from the bottom and began working my way upward. Naturally, not everything has gone smoothly.

I started by clearing space on the file server — I had been neglecting this project for so long that I had moved a lot of stuff into the empty disk space I had prepared for it. This has also been an opportunity for archiving project data offline and consolidating and reorganizing other data — as well as just getting rid of trash. But that’s a topic for another day.

Then I started populating my “/vol/asw” workspace and created the base storage volumes there that would serve as the starter for the volume storage. I’ve already got about 75GB of material, including the free music and sound effects archives and existing “Lunatics!” project data I’ve accumulated over the years. Some of this material is already in special archives, so I wrote a bash script to restore them to a “base” volume.

After that, the volumes have date-based names: the first is “asw-2020.03.10-2020.03.13”, for example.

And the currently active (writable) volume is just “head”.
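The naming scheme can be sketched in a few lines. The helper names `volume_name` and `sort_key` are made up for this example, and treating the base volume as a directory literally named "base" is an assumption.

```python
from datetime import date

HEAD = "head"  # the currently writable volume
BASE = "base"  # assumed directory name for the starter volume


def volume_name(prefix, first, last):
    """Build a date-range name such as 'asw-2020.03.10-2020.03.13'."""
    if last < first:
        raise ValueError("last date precedes first date")
    fmt = "%Y.%m.%d"
    return f"{prefix}-{first.strftime(fmt)}-{last.strftime(fmt)}"


def sort_key(name):
    """Order the base volume first, dated volumes next, head last."""
    if name == BASE:
        rank = 0
    elif name == HEAD:
        rank = 2
    else:
        rank = 1
    return (rank, name)
```

Because the dates are zero-padded and written year first, the dated names sort chronologically with a plain string comparison, which is all `sort_key` relies on for the middle group.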

My original plan was to use the “OverlayFS” file system for this, mainly because it is incorporated in the Linux Kernel, which made me think it would be more reliable.

However, it turns out not to be suitable for this application after all, for two main reasons. One — the show stopper — is that it can’t handle more than about three layers at a time. So the notion of daisy-chaining volumes, as described in the diagram above, doesn’t work at all. The other, which I only discovered because of the first problem, is that the daisy-chain approach is really complicated and involves “phantom mount points” and “work directories” that I have to create in the process of adding volumes. I had planned to write software to manage this, but it was pretty confusing.

In fact, I only discovered this today! What a letdown!

But after surprisingly little research, I was able to find an alternative, “MergerFS”, that is actually both more appropriate and more reliable for this task.

It’s a FUSE-based solution, and it’s not a speed-demon, to be sure, but it gets the job done well enough for my purposes (remember this is essentially a data-storage drive, not an active working directory — so access speed and particularly latency requirements are very forgiving).

What’s even nicer, though, is that with MergerFS, I don’t have to bother with this daisy-chaining model, or the complex configuration requirements it would take. Instead, I simply give it a “:”-separated list of directories I want merged, and it does it. So much simpler!
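To show how little configuration that is, here is a minimal sketch that assembles a `mergerfs` mount command from a list of volume directories. The function name, the directory layout under /vol/asw, the /ark/asw mount point, and the `allow_other` option are assumptions for illustration, not the configuration actually in use.

```python
import shlex


def mergerfs_command(branches, mountpoint, options="allow_other"):
    """Return the argv list that mounts `branches` merged at `mountpoint`."""
    if not branches:
        raise ValueError("need at least one branch directory")
    for branch in branches:
        # ':' is the separator, so it cannot appear inside a branch path.
        if ":" in branch:
            raise ValueError(f"branch path may not contain ':': {branch}")
    return ["mergerfs", "-o", options, ":".join(branches), mountpoint]


if __name__ == "__main__":
    # Hypothetical layout: head first, then dated volumes, then base.
    argv = mergerfs_command(
        [
            "/vol/asw/head",
            "/vol/asw/asw-2020.03.10-2020.03.13",
            "/vol/asw/base",
        ],
        "/ark/asw",
    )
    print(shlex.join(argv))
```

Adding a new volume then only means adding one more entry to the list and remounting, with no intermediate mount points or work directories to manage.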

Sharing the Volume

For filesystem access, I simply provide my merged filesystem as a network drive for my workstation.

When I was still using OverlayFS, I found that NFS simply wouldn’t work with the OverlayFS back end on the server. The reasons are technical, and there have been some changes in recent Linux kernels, but they still didn’t allow for what I was attempting.

So after looking into alternatives, I settled on learning Samba and setting up this volume as a “Samba Share”.

As you may have picked up from my previous posts, I am not a “Windows guy” and all of our computers run some Linux O/S (mostly Debian 9 and Ubuntu Studio 18.04, currently). So I had never had much need for Samba, and had never learned it. It’s really complicated compared to just using NFS, I must say.

However, I managed, with a lot of help from documentation and examples, to come up with a suitable solution: a single-user approach, with the share owned by my user on my workstation, but forced to a common user on the server. It has not actually come up, but it should be possible to map other people’s users to the same user on the file server, so as to avoid permissions clashes on shared folders. Not good for all purposes, but adequate for this one.
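For readers who have not seen one, a share of this kind comes down to a short section in smb.conf. The sketch below renders such a section. It is not the actual configuration from the server: the share name, path, and both user names are placeholders, and only standard smb.conf parameters (`path`, `valid users`, `force user`, `read only`, `browseable`) are used.

```python
def samba_share(name, path, client_user, server_user):
    """Render an smb.conf section for a single-user share.

    `client_user` is the only account allowed to connect, and every file
    operation on the server is performed as `server_user`.
    """
    settings = {
        "path": path,
        "valid users": client_user,
        "force user": server_user,
        "read only": "no",
        "browseable": "yes",
    }
    lines = [f"[{name}]"]
    lines += [f"    {key} = {value}" for key, value in settings.items()]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder names; substitute the real share path and accounts.
    print(samba_share("asw", "/ark/asw", "artist", "studio"))
```

Because of `force user`, files always end up owned by the one common account on the server no matter who connects, which is what avoids permission clashes on shared folders.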

I have not gone back to test whether MergerFS can work with NFS or not. I suspect not — that any FUSE mount would fail. But I haven’t tried.

However, I can verify that MergerFS works with Samba, and so I believe I’ve got my “volume storage” system working.

I do need to create some management scripts for maintenance and documentation.

But the next step is to start building the application stack on the server, using this volume storage as the data repository. I’ll post separately about that.

Mar 16, 2020 at 4:01 PM

Colorimeter Attempt

We’ve been noticing my monitor looks awfully blue, lately (not as blue as it looks to the camera in the video above, but still, bluer than it should be). This isn’t really that disturbing when I’m just using it, but since this is what I’m looking at when I’m setting up materials in Blender, the colors are a bit off.

There’s a particularly sensitive “indigo” color that I use quite a bit in “Lunatics!”, but when I get that color right on this monitor, the result actually looks kind of “plum” or even “pink” on a properly-calibrated monitor, which is obviously kind of frustrating.

The professional way to solve this, of course, is to color calibrate the monitor. Ideally, I would be able to see the NTSC color gamut as it is intended to look on screen.

To this end, I purchased an X-Rite ColorMunki Display colorimeter and used it with DisplayCAL, which is a standard package in Ubuntu Studio.

The software and the colorimeter appear to have worked very well together.

Unfortunately, what they told me is that my monitor is hopeless: even after adjusting it as far as it can go, the result is still too blue for the calibration to come up with correct colors. When I tried to just force it to go ahead and do the best it could, I wound up with a very incomplete color gamut and it actually looked a lot worse.

Not quite sure what I’m going to do about this. The obvious thing would be to get a real computer monitor with a wide gamut that can be properly calibrated. I may yet do that, but a direct replacement for this monitor would cost about $600, which is a lot of money.

Alternatively, I could try to calibrate the monitor on one of the other computers we have, and use it just for color setting.

In fact, this monitor isn’t at all unpleasant to use; it just doesn’t quite get the colors right, and so the result will not be as intended. If I do replace it, I’ll probably repurpose this old monitor for something I don’t need precise color on.

Mar 23, 2020 at 4:00 PM

All Backed-Up

This project has been going on for almost ten years, now, all told (I can hardly believe that, but it’s true), and the data produced has gotten pretty baroque over the years, with lots of ad hoc storage solutions and directory structures. I’m starting to untangle this, but of course, there’s always a risk of losing something in the process, and we’ve also got very inconsistent backups.

So at the beginning of this month (March 2020), I started backing up the entire Lunatics! directory tree to 25GB BD-R (Blu-ray) media. These are not the Millenniata M-Disc media, but just regular dye-based media, which are cheaper and quite adequate for storing the data near-term. My plan is to back everything up to archival M-Disc media after I make the big reorganization.

But I just wanted to get everything stored for now.

It took 7 discs (so up to about 175 GB of data) to get it all.

No doubt there is some redundancy and unnecessary stuff included, but I didn’t want to take a chance on deleting something I’d want later. So I just shoveled everything on there.

It’s not that disorganized. I broke the project data up into “Production”, “Business”, and “Tech” categories, with further subdivisions in each. The “Production” folder (the largest) has all the stuff related to producing the show: the actual source tree, reference data, imported data, and so on. The “Business” folder has legal and financial documents, marketing, websites and server installation notes, and so on. And “Tech” has software distributions, how-tos, technical notes, and so on for a wide variety of packages, plugins, and add-ons.

Mar 24, 2020 at 4:01 PM

Spring Cleaning!

Things are looking a lot nicer around here, after I finally took some time to clean up our studio library area for an interview earlier this month.

As I write this, towards the end of March, of course, I’m really glad I did this, because we are in the midst of “social distancing” and “self-isolation” because of the Coronavirus/SARS2 outbreak all across the USA. I’m not sure how long we’re going to be doing this, but I’m going to be spending a lot of time in this studio.

I’m hoping this will translate to high productivity on “Lunatics!”

I’ve already started installing a lot of studio software on our LAN file-server (you can actually see the front of it on the right side of the second picture above; I have a little 7-inch console monitor mounted on the front of it).

Mar 25, 2020 at 4:01 PM

Project Sound Archive

As part of my data migration and backup plan, I’ve now burned all the music and sound effects tracks I’ve collected for use on “Lunatics!” and related studio projects to M-Disc BD-R for long-term offline storage.

I’m also incorporating these into a DAMS for easier access in the future, but I wanted to make sure I had a good archival backup as well.

Unfortunately, as time passes, some of these tracks will disappear from the Internet, and so it’s important to have my own copy.

These are the fruit of a long-term project to listen to free-licensed tracks and collect the ones I felt were usable for our project, so it is a kind of curated collection.

In a few cases, the original artists will have removed the track or changed the license it is offered under to a non-free license. They are, of course, within their rights to do that, although the Creative Commons licenses themselves are non-revocable, so my previously-downloaded copy is still legally under the license offered at the time I downloaded it.

That sounds fine in principle, but there is a catch: it’s pretty much my word against theirs if I’m ever challenged on this. And that’s kind of sad. The best I can do for proof, really, is to screen capture the website or find it in the Internet Archive’s historical “Wayback Machine” database. I’ve long thought this might be an application for a blockchain-based solution to certify public licenses, but I don’t have anything like that set up yet.
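On the Wayback Machine side, a lookup link for a given page and date is easy to build, since the archive addresses captures by a timestamp followed by the original URL and serves the capture closest to that timestamp. A minimal sketch follows; the function name and the example track URL are invented for illustration.

```python
from datetime import datetime


def wayback_url(page_url, when):
    """Return a Wayback Machine URL for the capture nearest to `when`."""
    stamp = when.strftime("%Y%m%d%H%M%S")
    return f"https://web.archive.org/web/{stamp}/{page_url}"


if __name__ == "__main__":
    # Hypothetical track page and download date.
    print(wayback_url("https://example.com/track", datetime(2020, 3, 25, 16, 1)))
```

Saving such a link next to each downloaded track, along with the download date, at least records where to look if the license is ever questioned. It only helps, of course, if the archive happened to capture the page around that time.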

Mar 30, 2020 at 4:01 PM

Seafile Cloud Storage in the Studio

I’ve been installing software on our on-site studio fileserver. This is both for local use and as a testbed for software I might put on our project server.

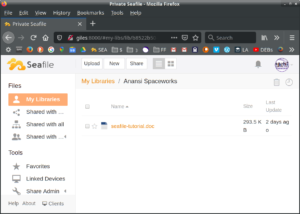

After a somewhat frustrating development process, I now have an Ansible role for installing Seafile, which is an open-source self-hosted alternative to services like Google Drive or Dropbox.

With it, I can upload data from Android or Apple devices (e.g. smartphones) to the local server and then transfer it to my workstation. This has two main advantages over using “software as a service” solutions like Google Drive:

1. Our data stays private (no third parties handling it).

2. It’s much faster, since it goes at the full speed of our LAN, rather than going out over ADSL to get to an outside server and back.

I’ve attached the Ansible “seafile” role main task and the systemd templates I used here, if you want to look at them. The server specifics are held in a “group_vars” file, which contains a “seafile_sites” list (theoretically one might be able to install more than one instance of the program with this, though I haven’t tested if that really works).

You also need to download a copy of the program and put the archive into the ‘files’ folder. I actually got a copy of Seafile 6.3.4, because it seemed to work better with the Debian 9 system I have installed on our local server. I probably ought to consider upgrading to Debian 10!

I’m still pretty new to systemd initialization — I’m used to the old System V approach. But I was able to get these service files working.