I’ve been considering how we want to set up our virtual-studio asset-management system. There are a number of problems I’ve wanted to solve to plan for the future:

- The source archive is already around 15 GB. This is the original art, Blender models, sound effects, and some rendered video.

- There’s another 47 GB in the full music sources archive (I’ve previously not considered it practical to put this online, but I really would like to have it in the DAMS, so I can start adding metadata). This is essentially a collection of vetted, free-licensed music albums and tracks for potential use in our soundtrack. I’ve been drawing from this collection for our music and sound design. Quite a lot of work has gone into collecting this, but the metadata is very limited (just file and directory names containing source archive, license, author, album, and track names). I would like to make this into a better-organized DAMS with additional metadata about mood, genre, character and theme associations, and other details that I’ve just been keeping in my head (and now the archive is way too big for me to hang onto all that, to say nothing of communicating it to others).

- I want to keep permanent archives of the assets on M-DISC Blu-ray recordable media. These are 25 GB volumes, using the M-DISC technology, which is much more durable than dye-based optical media (comparable to pressed optical media like commercial DVD & Blu-ray discs). But as the roughly 62 GB we already have will take three BD-RM volumes, it’s clearly going to be necessary to have a multi-volume backup system in place for that.

- Keeping the definitive repository on our local, on-site computer is impractical, because remote users wouldn’t be able to access it, defeating most of the point of using a DAMS for Internet collaboration on our project.

- Keeping the repo on the public server is very inconvenient for me and for rendering, since both my workstation and the render cluster are located here on site. Our bandwidth is pretty good for consumer-grade DSL, especially for a rural site, at 12 Mbps, but not anything like a data center! Our connection is also asymmetric, with much slower upload than download. This would mean that every time I did anything with the assets, and every time the render manager synced files for rendering, massive amounts of data would have to be downloaded and then uploaded to the server. It’s pretty silly to do that when we have most of the same data sitting on a hard drive right here.

- Also, even if we wanted to keep the data on our web server, we couldn’t afford to. We’re already paying an extra $20 a month for about 30 GB of extra storage to accommodate the existing Subversion repo ($44/mo instead of $24/mo). Clearly we need to move that to cheaper hosting, such as Amazon S3 (or a compatible competitor).
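
As a quick sanity check on the numbers in the list above, here is a back-of-the-envelope calculation in Python. It’s only a sketch: it assumes decimal gigabytes, treats the 12 Mbps figure as our download rate, and ignores protocol overhead. The function names are made up for this example.

```python
import math

GB = 10**9  # decimal gigabytes (an assumption; disc vendors count this way)


def volumes_needed(total_bytes, volume_bytes=25 * GB):
    """Number of fixed-size volumes required to hold total_bytes."""
    return math.ceil(total_bytes / volume_bytes)


def transfer_hours(total_bytes, link_mbps):
    """Hours to move total_bytes over a link of link_mbps megabits per second."""
    seconds = total_bytes * 8 / (link_mbps * 10**6)
    return seconds / 3600


# Source archive plus music archive, from the figures above.
archive = (15 + 47) * GB

print(volumes_needed(archive))
print(round(transfer_hours(archive, 12), 1))
```

That works out to three 25 GB volumes, and roughly 11.5 hours just to pull the whole archive down once at full line speed. Pushing it back up over the slower upload side would take longer still.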

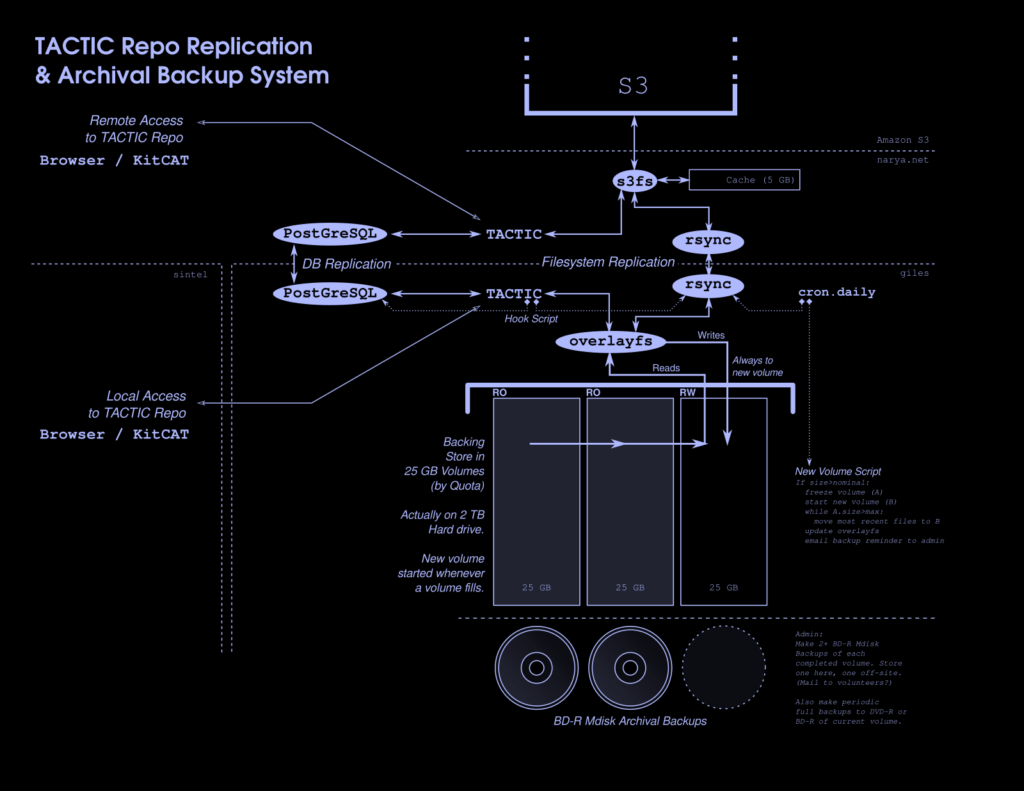

Fortunately, there is a way we can have our cake and eat it too, because TACTIC supports replication for load-balancing. Ours is a rather special use-case, but the same technique should work. TACTIC keeps control data in a PostgreSQL database and stores assets as files on the file system. As far as I know, it’s relatively undemanding on the file system, simply serving and storing whole files (this could be a trouble spot if I’m wrong about that).

So the first obvious thing is to install an S3-backed virtual file system on the public server. This means the asset archive can actually live in Amazon S3 storage, which is very cheap: running a few scenarios through Amazon’s price calculator, I find that our current archive would cost maybe $0.50/mo to host, and an archive of 1 TB or so could be hosted for less than what we are currently paying for 30 GB. This is obviously a big improvement!

There is such a virtual file system, called “s3fs”, which is based on FUSE, and already in the Debian archive, so installing this is trivial.
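
To give an idea of what mounting looks like, here is a small Python sketch that builds the s3fs command line. The bucket name, mount point, and credentials file path are all placeholders I made up; only the basic `s3fs bucket mountpoint -o passwd_file=...` form is taken from s3fs itself. It prints the command rather than running it.

```python
import shlex


def s3fs_mount_command(bucket, mountpoint, passwd_file):
    """Build the argument list for mounting an S3 bucket with s3fs."""
    return ["s3fs", bucket, mountpoint, "-o", f"passwd_file={passwd_file}"]


# Hypothetical names, for illustration only.
cmd = s3fs_mount_command(
    "example-assets", "/srv/tactic/assets", "/etc/passwd-s3fs"
)

# Print the command instead of running it, so it can be reviewed first.
print(shlex.join(cmd))
```

Once it looks right, the list could be handed to `subprocess.run(cmd, check=True)` on the server.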

The second part is to set TACTIC up for replication, with just two replicas — one running on our local server and one running on the public one. It would be possible to create additional site replicas if we had another node where a lot of people were working, but currently, I don’t see the need for this as other collaborators are working from individual home sites and typically don’t need to access much of the archive at a time.

This is fairly complicated, but also pretty well documented: PostgreSQL supports database replication and rsync can be used to keep two file systems in sync (a very common use case for administrators). TACTIC replication primarily consists of replicating these two types of data stores, with some minor configuration of each replica, which is included in the admin documentation for TACTIC (although the manual assumes you’re doing this for load-balancing in a data center, rather than bridging a slow link, which is our case).
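
The file half of that could be as simple as a scheduled rsync between the two asset trees. Here is a minimal Python sketch of a one-way push, assuming hypothetical paths and a hypothetical SSH destination. It deliberately leaves out `--delete`, and it doesn’t cover the PostgreSQL side at all.

```python
def rsync_command(src_dir, dest, dry_run=False):
    """Build an rsync argument list that copies the contents of src_dir to dest."""
    # -a preserves permissions, times, and directory structure;
    # --partial keeps interrupted transfers so a slow link can resume them.
    cmd = ["rsync", "-a", "--partial"]
    if dry_run:
        # Show what would be transferred without copying anything.
        cmd.append("--dry-run")
    # A trailing slash on the source means "the contents of this directory".
    cmd += [src_dir.rstrip("/") + "/", dest]
    return cmd


# Hypothetical local tree and remote destination, for illustration only.
push = rsync_command(
    "/srv/tactic/assets",
    "tactic@public.example.org:/srv/tactic/assets/",
    dry_run=True,
)
print(" ".join(push))
```

Starting with `dry_run=True` seems prudent on a slow link, since it shows how much would move before committing to the transfer.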

That leaves the multi-volume backup problem to consider.

What I want to do is to actually keep the assets physically stored in convenient volume-size directories on the local server, with each backed up to a BD-RM disk when it fills up (and then made read-only so the directory and the disk stay in sync). Later information would then go onto a new volume. Also, we’d back up the TACTIC metadata database into the headroom over the primary data on the backup (i.e. we’d leave some space on each volume for a full backup of the much smaller control data from TACTIC at the time the volume was frozen). This means that all volumes up to and including that volume could be used to create a completely consistent TACTIC snapshot of the DAMS at the time the last volume was frozen. If for some reason one of the earlier volumes were missing, the remaining volumes could still create a DAMS with all of the backed-up assets on the available volumes.
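
The fill-and-freeze logic described above can be sketched in a few lines of Python. This is just an illustration of the idea, not anything TACTIC provides: the `Volume` class, the `assign` function, and the 1 GB headroom figure are all assumptions of mine.

```python
from dataclasses import dataclass, field

GB = 10**9


@dataclass
class Volume:
    index: int
    capacity: int          # raw disc capacity in bytes
    headroom: int          # space reserved for the database dump
    used: int = 0
    files: list = field(default_factory=list)
    frozen: bool = False   # True once the volume is full and read-only

    def fits(self, size):
        """True if a file of this size fits below the reserved headroom."""
        return self.used + size <= self.capacity - self.headroom


def assign(files, capacity=25 * GB, headroom=1 * GB):
    """Place (name, size) pairs on volumes in arrival order.

    When the next file does not fit, the current volume is frozen and
    a new one is started; frozen volumes are never written again.
    """
    volumes = [Volume(1, capacity, headroom)]
    for name, size in files:
        if size > capacity - headroom:
            raise ValueError(f"{name} is too large for a single volume")
        current = volumes[-1]
        if not current.fits(size):
            current.frozen = True
            current = Volume(current.index + 1, capacity, headroom)
            volumes.append(current)
        current.files.append(name)
        current.used += size
    return volumes


# Three hypothetical 10 GB assets: two fit on volume 1, the third starts volume 2.
result = assign([("a.blend", 10 * GB), ("b.flac", 10 * GB), ("c.mkv", 10 * GB)])
for vol in result:
    print(vol.index, vol.files, "frozen" if vol.frozen else "open")
```

Files are never moved between volumes to pack them tighter, since a frozen volume has to keep matching its disk.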

To avoid keeping lots of copies of this same data, the volume directories could then be mounted using the overlayfs system, which is a virtual file system supported in Linux kernels since 3.18 (we’ll be running 4.9+, which is standard in the Debian 9 distro we’ll be using on servers after upgrades).
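
Here is a rough Python sketch of how the overlay mount command might be assembled from a list of volume directories. The paths are hypothetical and the function is my own; the `lowerdir`, `upperdir`, and `workdir` options are the standard overlayfs ones. It only builds the command, since actually mounting needs root.

```python
def overlay_mount_command(volume_dirs, merged, upper=None, work=None):
    """Build a mount command that merges volume directories with overlayfs.

    volume_dirs is ordered oldest to newest. overlayfs lists lowerdir
    layers from top to bottom, so the newest volume goes first.
    """
    if upper is None and len(volume_dirs) < 2:
        # A read-only overlay (no upperdir) is built from at least two lower layers.
        raise ValueError("need at least two volumes for a read-only overlay")
    if upper is not None and work is None:
        # workdir is required with upperdir and must share its file system.
        raise ValueError("upperdir requires a workdir")
    opts = "lowerdir=" + ":".join(reversed(volume_dirs))
    if upper is not None:
        opts += f",upperdir={upper},workdir={work}"
    return ["mount", "-t", "overlay", "overlay", "-o", opts, merged]


# Hypothetical layout: two frozen volumes merged read-only into one tree.
cmd = overlay_mount_command(
    ["/srv/volumes/0001", "/srv/volumes/0002"], "/srv/tactic/assets"
)
print(" ".join(cmd))
```

One option I haven’t settled on is passing the volume that is still filling up as `upper`, so that new files land there while the frozen volumes stay untouched underneath.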

Putting all of that together, I came up with this plan:

We are probably NOT going to roll this out immediately — my first goal will be to get a single TACTIC instance running on the public server. But it’s good to know where we’re going so I can plan ahead.