HIGHLIGHTS:

A lot of personal and household work this month, as well as some catch-up on documentation and worklogs. Experimented with the new generation of AI art engines: both getting renders from prompts and figuring out ways to make use of the output.

I have been getting more experience using the software I have installed, especially Git and Gitea.

LUNATICS PRODUCTION:

Separated the PLSS pack from the Aquarium Room set and started working on some generic detailing.

I also designed a “Gaming Pad” (like a whole-desk mousepad), based on the episode-1 cover art. This was largely because my mousepad is shot, and I thought I might get a replacement. But also because it would be a prototype for possible merch.

EXPERIMENTAL PRODUCTION:

I finally caved and got an account on the MidJourney AI art platform to see what I could do with it. I’ve made a lot of experimental art with it, and also found some potential practical applications: backdrops, billboards, computer screen textures, complex textures, and even simple props.

FILM-FREEDOM PRODUCTION:

Started a new series of “Log Extract” videos, which are derived from my worklogs. I just extract interesting projects, add appropriate music, and do more careful editing for public release. They have no voice-over, but just use intertitles and overlays to explain what’s going on. And for the two I have completed, at least, I edited to match the music length, so each one is basically a low-key music video, too, showcasing free-culture music.

IT WORK:

Finally got around to repairing/upgrading Monolith for Nicholas.

VIRTUAL STUDIO:

I’m getting some practice using Git and Gitea, and I’ve switched over to using the Git repos as the primary project VCS.

I’ve started documenting the site. I collected notes and started some documentation on how I migrated to Git and set up the Gitea server to handle production data (which requires managing large binary blobs).

FEDIVERSE PROMOTION:

I’m continuing to grow my following on Mastodon. I seem to have a knack for this. It’s just striking up conversations, talking to people, and posting about my various art and technical projects. This still seems like my best bet for promoting “Lunatics!” and related projects.

WORKLOGS:

I’d gotten pretty far behind on the topical and monthly worklog summaries, so I spent some time getting the ones for May & June finished.

GROUNDS:

I fixed the bent blade on the lawn mower, and started catching up on all the mowing.

It has been extremely hot, so I’ve been trying to do all that work at dusk and dawn.

Realizing this is going to be an ongoing problem, I purchased a set of halogen work lights to use to do outdoor work at night when it’s cool.

A Little MidJourney Side Trip…

Logically, I should probably be documenting my Gitea installation or maybe starting back up on assets I need to finish episode 1. But my social media feeds have been full of a new wave of AI-generative art that many people I know are playing with.

I finally got sucked in and signed up for a basic membership on MidJourney, so I can experiment with it, and I’ve spent the last two days feeding it prompts and seeing what I get back. How hard can I push it?

The lead image here is NOT exactly from MidJourney; it’s a combination of renders from two MidJourney prompts. This one with the caterpillar creature and the prototype for the planet in the sky:

…and this one of an “indigo jungle at sunrise”:

It can be a little hard to steer the AI where you want it to go — I actually asked for the indigo jungle to appear in the shot with the caterpillar, but I couldn’t make it happen in one render. MidJourney sort of drops things if it can’t figure out how to make it work.

But of course, the artistic way to use these AI tools is to use them along with all the rest of your toolkit.

Legal and Ethical Issues

A number of philosophical issues arise with this kind of AI-generative art. Of course it is really trained on a whole lot of art and images that the company acquired from Internet sources, and there’s not any real detail available to users on where those images came from.

Are they engaging in some sort of copyright infringement by doing that? Or does it fall under “fair use” — perhaps because it is sufficiently “transformative”?

There are people arguing both sides of that. It’s very similar to the issue with GitHub and the “Copilot” code generator, which is similar in concept, if not in application.

And then there’s my contribution, which seems pretty minimal. I wrote some text and a minute or so later got these pictures. It’s actually a pretty big rush — especially when it works. But did I create these images? Or just ask for them to be created? Do I have the right to claim authorship of them?

Well, I don’t think I can solidly answer these questions here — just acknowledge them.

The MidJourney terms of service say that I have “full commercial rights” to these works (because I’m a member — when I was using the trial, they claimed they were under a CC By-NC license from them).

And that brings me to the ethical or mission question of “is this okay to use on my Open Movie project”?

Well, it’s not properly a “free-software tool”, I have to admit, although the issue isn’t really the tool. Free-licensed AI software libraries certainly exist. But what makes MidJourney powerful is the training. It’s very much like Google’s search engine, which is powerful not so much because of the software (much of which is, or was, open-source, I understand), but because of the effort put into indexing. It isn’t that hard to write a search engine, and open source search engines exist. But building up the data to generate good search responses is hard.

However, I think this falls under the same general “found art” category as images on Flickr or Pixabay, or the samples used in synthesized music. I’m actually pretty comfortable with the idea of just incorporating some of this output as images, which I’m free to release under the same CC By-SA 4.0 license as the rest of our sources (legally, I could probably use any license, but ethically I feel it’s only right to use free public licenses for this work).

But What’s It Actually Good For?

After spending some time on purely creative and fun prompts, I started asking myself whether I could make MidJourney “sing for its supper”: can I get back more than the $10 a month worth of value that I’m now putting into it, by creating useful assets with it?

After some experimentation and some reflection on applications, I decided that the most promising areas were “background” filler roles, such as:

- Backdrops

- Greebles

- Textures

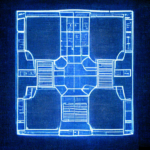

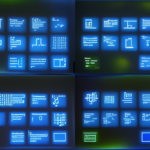

- Computer/Display Screens

- Set Dressing (Billboards?)

These are all areas which can be very time-consuming to generate by hand, but which don’t really require a lot of discipline in design. Nor do they need to be strikingly original. The default “cliche shelf” output that you tend to get from an AI will be just fine in these areas.

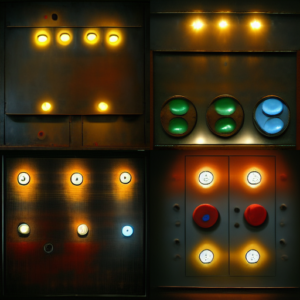

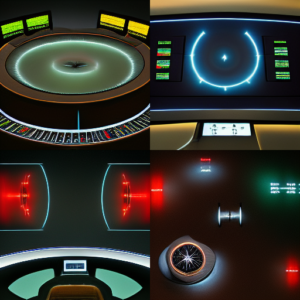

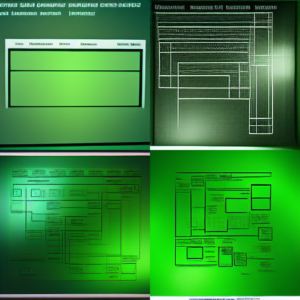

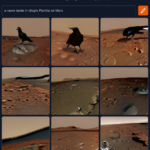

Here are some computer screens, for example:

This sort of stuff is similar to all the monitor screens I created for the “Mission Control Room” set. I used a variety of techniques to generate those, ranging from simple or complex drawings in Inkscape to screen captures off of my own monitor.

These AI-generated screens would be entirely adequate for such purposes — at least the ones that the audience isn’t supposed to be able to read. I’m not reworking that set, but there will be many others.

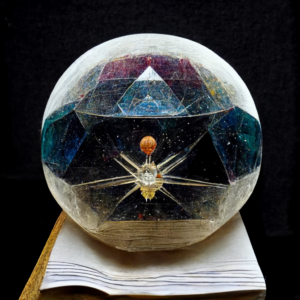

Small props that might appear (either as small flat billboards or as textures mapped onto simple 3D models) as set dressing are possible:

I could use these as a guide to create simple 3D objects and even map the image as a texture onto a simple 3D sphere model — it would break down if you move too far to the side, but it could work over a fairly wide angle, if I know we’re not likely to get too close to it.

I might demonstrate this experimentally with one of these as a warm-up Blender project.

And it can generate some interesting textures:

And there are texture images that could be used essentially as greebles:

It wouldn’t hold up in the foreground, of course, but way in the back of a shot, it could certainly help to sell the scene without having to spend ridiculous amounts of time modeling things that will barely be visible on screen.

Backdrops

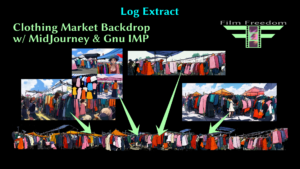

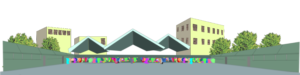

So far, this was all pretty theoretical, and nothing it had produced was superior to the assets I’ve already created by hand (except that it didn’t take as long). But then I tried creating some backdrops, and realized these are better than what I’m using now. For example, in the background of the open-market shots in Baikonur, I have a bunch of “clothing racks” that are really just splotches of color I put together quickly in Inkscape:

I used several billboards like this, and then a large backdrop in the very back of the shot, with some architectural elements:

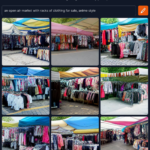

But let’s see what MidJourney can do for me, with a prompt like “an open-air market with racks of clothing for sale, anime style”:

This is pretty impressive, and with a little tweaking in Gimp, this is very usable. I would need to take out the sky and creatively trim the edges, using several panels like this (which are easily generated!) to extend the width and layer the elements, as I did with the original billboards.

Sometimes MidJourney gets a little more creative than I expect it to. One of the renders for this prompt invented an anime character in the foreground of the shot (no doubt triggered by the “anime style” prompt):

Hello there! She looks a little run down. I fear MidJourney might be exposing its received biases about who shops in open air markets. Such issues come with the territory. AI generators base their output on their training sets, and the training sets reflect human biases and sometimes uncomfortable realities.

In fact, I have already rendered the shots from the open market in Baikonur, so it might be frivolous to do this work now — but I’m honestly tempted to try reworking that shot. I wasn’t thoroughly happy with the way the backdrop and billboards were working:

Well… I probably won’t do it until I’ve got the other shots finished, but I’ll keep it in mind.

There are some other shots, including some unfinished ones, or ones already in need of rework, where other backdrops might be better than the ones I’m using.

I have a very simple backdrop for the distant plain and mountains seen in the train sequence at the beginning and some more at the spaceport. It wouldn’t be too hard to slip in replacements, and MidJourney can do some nice things with a prompt like “steppe grassland with mountains in the distance”:

It isn’t perfect. The grass is too green, and it’s hard to tell whether those are clouds or snow-capped peaks in the very back (to be fair, it’s sometimes hard to tell that in real life). But a little quick work in Gimp can fade the grass and provide a fuzzy clip-line for the mountain peaks. It can also be used to stitch several similar panels into a very long horizon-backdrop.
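The stitching itself is hand work in Gimp, but the idea of joining several similar panels into one long horizon strip can be sketched in code. This is only a minimal illustration, assuming each panel is a grayscale image stored as a list of rows of floats and that all panels have the same height. The function name `stitch_panels` and the `overlap` parameter are hypothetical, not part of Gimp or MidJourney. Neighbouring panels are cross-faded linearly over the overlapping columns so the seam is less visible.

```python
def stitch_panels(panels, overlap):
    """Join same-height grayscale panels left to right, cross-fading each seam.

    panels  -- list of images, each a list of rows, each row a list of floats
    overlap -- number of columns blended between neighbouring panels
    """
    if not panels:
        raise ValueError("need at least one panel")
    height = len(panels[0])
    if any(len(p) != height for p in panels):
        raise ValueError("all panels must have the same height")
    if overlap < 0 or any(len(row) < overlap for p in panels for row in p):
        raise ValueError("overlap must fit inside every panel")

    # Start from a copy of the first panel so the inputs are not modified.
    result = [list(row) for row in panels[0]]
    for panel in panels[1:]:
        for y in range(height):
            left, right = result[y], panel[y]
            start = len(left) - overlap
            for i in range(overlap):
                # The weight ramps from mostly-left to mostly-right across the seam.
                w = (i + 1) / (overlap + 1)
                left[start + i] = (1 - w) * left[start + i] + w * right[i]
            # Append the part of the new panel that lies beyond the seam.
            left.extend(right[overlap:])
    return result


# Two tiny one-row "panels": a dark one and a bright one.
dark = [[0.0, 0.0, 0.0, 0.0]]
bright = [[1.0, 1.0, 1.0, 1.0]]
strip = stitch_panels([dark, bright], overlap=2)
print(len(strip[0]))  # 4 + 4 - 2 = 6 columns
```

A real backdrop would need colour channels and probably a hand-painted seam, so a sketch like this is mainly useful for roughing out how wide the final strip will be for a given number of panels and overlap.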

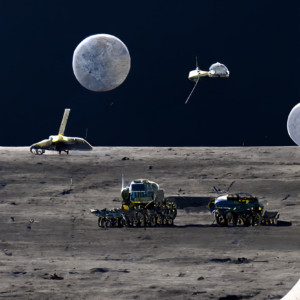

Unfortunately, I don’t think MidJourney will be much use for the Moon. My attempts to create similar backdrops of the lunar environment have been disasters. I think there must not be enough NASA photos in MidJourney’s training set! It acts like it has seen more cartoon moons than real ones — and it keeps wanting to put the moon in the sky, too. Sometimes multiple times:

That last one (actually one of the earliest I created) is probably the best. Weirdly, it did a lot better creating the terrain when I asked for the spaceships, but I would actually need to generate the terrain without them. I suppose I could creatively paint them out, but that starts to get to be as hard as the techniques I was using before (which started with NASA photos).

Random Weirdness

I’ll sign off with a little gallery of some of my purely weird, unrelated results, from when I was just playing with it, with no particular goals.

Alien creature writing his memoirs in his forest residence:

A sci-fi engine room:

A stone megalith of a wolf overlooks a field of flowers:

Some astronaut portraits (I was actually trying to see if maybe it could do better for the wall portraits I actually used in the back of the press conference scene, but I don’t think so):

Some sci-fi control panels. Seems pretty “trekky” to me:

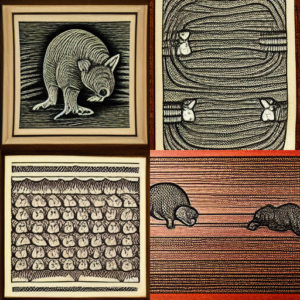

Some whimsical results for “a wombat wielding wires in wonderland”, which I wrote purely for the alliteration:

Another engine room:

I think I may have found another tool for my toolkit! But it’s clear that I’ll still be making a lot of my assets the old-fashioned way.

MidJourney Experiments Follow-Up

This month, I did some experiments with the MidJourney prompt-to-art AI generator, to see what artistic and production uses it might be put to. I wrote about this in my previous post.

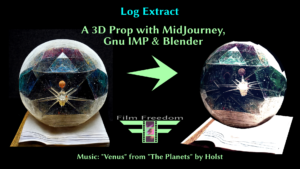

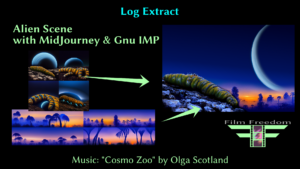

Since I take screencast logs while I’m working, I had records of my process, so I made “Log Extracts”. This is a new video category I’m doing for the “Film Freedom Channel” on our new PeerTube site, though I’ve done a few similar ones before.

There are two new videos up. One is about the composited “Alien Caterpillar” image I used for the last update. This video follows the process of generating the prompt images and then compositing the image in Gimp, set to “Cosmo Zoo”, by Olga Scotland (which is drawn from my “Soundtrack Library” collection of free-licensed music — I downloaded this years ago from Jamendo, so I’m not sure if it’s even still published there, but it might be).

https://tv.filmfreedom.net/w/6hAq8cpTYBtBuMhzghpGyM

The other video was a bit more of a challenge. I used a “prop-like” render from MidJourney, and made an actual 3D prop out of it in Blender, by creating a simple shape, and then mapping the imagery onto it (after some processing in Gimp). I really liked how this came out. It seems like a very viable workflow:

https://tv.filmfreedom.net/w/g13qAmKxqRAU7skBY9CxLh

I began a third video, based on the backdrop for the open market, but after working with the logs a bit, I realized it felt incomplete without showing it being used to make a set. So I’ll probably finish that one later, when I actually do that part of it.

Another Log Extract Should be Coming — Perhaps Next Month?

This is a new video series that I’ve started, as a low-impact production to make use of my daily screencasts. These each took about a day to edit. There’s no voice-over. Just intertitles to explain what’s going on, combined with the music. And in both cases so far, I revised the video to match the length of the music, rather than fitting the music to the video length, as you can also think of them as music videos. I’ve found that many people find music without voice a bit more relaxing, so that’s the idea I have here (it also means I don’t have to record voiceovers).
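Matching the footage to the music is mostly a matter of cutting, but when a uniform speed change is part of the edit, the arithmetic is simple. The sketch below is an illustration only: the function name `retime_factor` and the durations are made up, and it assumes the whole clip is retimed by a single constant factor.

```python
def retime_factor(footage_seconds, music_seconds):
    """Playback-speed multiplier that makes the footage last as long as the music."""
    if footage_seconds <= 0 or music_seconds <= 0:
        raise ValueError("durations must be positive")
    return footage_seconds / music_seconds


# Hypothetical numbers: 10 minutes of trimmed screencast, a 4-minute track.
factor = retime_factor(footage_seconds=600.0, music_seconds=240.0)
print(factor)  # 2.5
```

A factor above 1 means the footage has to be sped up (or cut further); a factor below 1 means it would have to be slowed down or padded with intertitles.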

I also did start on detailing the PLSS pack for the “Suiting Up” scene in “Lunatics!”, last week. Felt good to get back to production, but it’s not really finished enough for a whole post about it. Maybe after I add the control panel.

Photos from July

Packed up the large monitor to see if I can get it repaired or replaced. Set up the smaller monitor as a single display. Fixed the broken door handle on the front of the studio.

A very nice sunset, while mowing at dusk to avoid the heat.

More AI Art Renders from July

I did a lot of MidJourney renders! I wanted to figure out what it was capable of, and whether it’d be any practical use.

The first day was very experimental.

The next day, I got a little more targeted, trying to get practical results. Also discovered how to control the aspect ratio.

Experiments with creating textures:

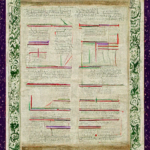

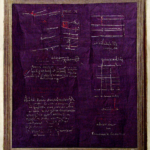

Page textures and “greeble” detailing:

Video/computer screen textures:

Landscapes, revisiting the “indigo jungle” and “fir forest” prompts:

I felt like I hit “pay dirt” with the “clothing market” and “distant mountains” prompts. These produced assets I could probably make use of:

More generic exploration:

I slowed down a little after the first couple of days.

I thought maybe I might find another win like I did with the clothing market, with parking lots or airports, but the results were not as satisfying:

And I went round and round trying to get something like a realistic Moon surface horizon. One insight was: “Don’t say ‘Moon’!” That kind of makes sense: if you say “Earth”, it’s going to try to draw the whole Earth, not a typical field in the prairie. So I tried various ways to describe the lunar surface:

I tried a few variations looking for impressions of a “space port”.

Ran a little low on ideas.

I was getting low on the allowed queries on my “basic” MJ account, so in the middle of the month, I did some tests with a different AI art generator, Craiyon. Craiyon doesn’t have the same nice terms as MidJourney and doesn’t generate the high-resolution images, but it is free to use, and provides a nice way to explore the technology.

Of course, I just tried some general prompts to see what it could do:

But a major thing I tried was to give Craiyon the same (or similar) prompts that I had given MidJourney, to see how the results would differ.

It did a little better with the moonscape prompts.

I tried some other space themes:

And I discovered that Craiyon really doesn’t have a very good concept of numbers, which is kind of ironic for a computer. But asking for a specific number of monkeys at typewriters doesn’t work, and vague number terms didn’t work much better:

I’d seen a number of cool posts with “Cyberpunk” prompts, which got me started making some stylized skylines:

Afterwards, I found I still had a little time on MidJourney (I think they added some for some reason), and so I tried a similar prompt to get a surprisingly similar result:

If I do a Dallas digital art scene flyer or something, this might be my cover art!

I made a number of pop-cultural reference tests to see which characters Craiyon would “recognize”. I accidentally deleted most of those, but I saved a few. It’s somewhat hit-and-miss:

Then I tried a large range of stylistic variations on the same simple prompt “a red and violet jungle” (the success with the skyline above suggested that some of the style prompts might work on Craiyon much as they do on MidJourney):

I tried a few other subject prompts with some of these style prompts:

A few more science-fiction inspired tries with Craiyon:

And then I tried an interesting experiment with fake-but-plausible English words. What would Craiyon guess they meant?

Near the end of the month, MidJourney updated their algorithm. The new, third version seems to do better with faces, so I tried a few prompts to take advantage of this:

I tried doing some compositing in GIMP to get a ‘best of both worlds’ version of the pilot prompt:

Not sure which background I liked better:

And finally, I retried some of the more successful prompts from the version 2 experiments, but using version 3 this time. The spaceport seems like an improvement, but I think I liked the version 2 “clothing market” results better.

And that took me to the end of the month. I did a few more experiments in August, which I’ll include in the next summary.