One of the line items on the budget for this Kickstarter is to build a “render cluster”. You might be wondering what that is, and why we might need one.

We’ve thought about several options for handling rendering from Blender. One is to simply rely on our desktop workstations to do the rendering — but that would be very, very slow, even if my system weren’t several years out of date. We could also run Blender network rendering on several different desktop computers, spreading out the load. We could even use the publicly-available renderfarm.fi cluster. However, as exciting as I think renderfarm.fi is, I feel that our using it for such a large (and ultimately commercial) project might border on abusing a public resource.

It would be far better, I think, for us to build our own rendering hardware. And then, when we’re not currently using it for production, we might consider making it available to other free-culture projects as well, to give back to the community rather than always taking. So, that’s why I’ve budgeted to build a cluster. Of course, I also wanted to do it for the least amount of money I could, and so I did a very careful cost-benefit analysis. This is what I came up with.

An Inexpensive 48-Core Rendering Cluster

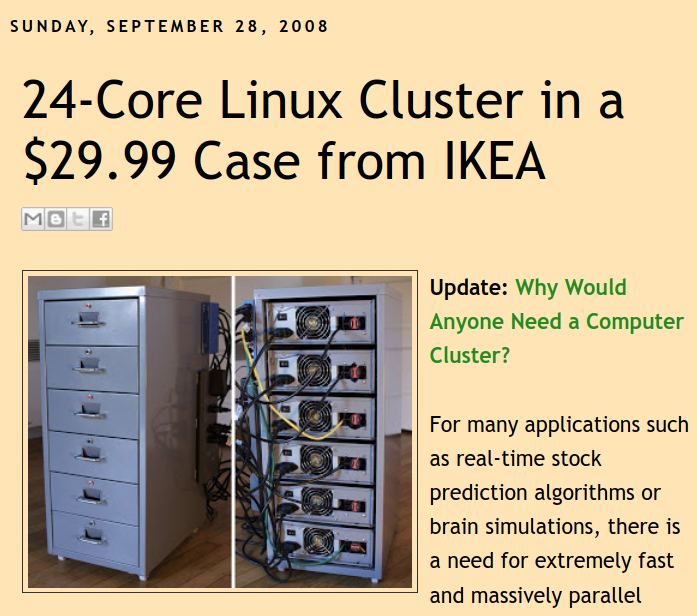

Our plan is to build what is affectionately known as an “Ikea cluster”. It’s an extraordinarily cheap way to build a high-powered computing cluster, in our case to handle the enormous but highly parallel task of rendering animation frames from Blender.

Some time ago, a home computer builder noticed that a standard “Micro ATX” motherboard fits rather nicely into the drawer slots of an Ikea “Helmer” cabinet. His cluster looked a bit sloppy, but it saved an enormous amount over buying server case hardware: the Helmer cost him only about $30, roughly the price of a single budget tower case for one motherboard.

A later builder did something similar, but he turned the motherboards 90 degrees and installed them directly into the drawers of the same cabinet, which made for a much neater case and better airflow. Both builders ended up with a 6-motherboard computing cluster. At the time, high-end desktop CPUs had 4 cores, so these were 24-core clusters.

Technology has moved on a bit, but Micro ATX is still a very standard size for desktop motherboards, and very affordable ones are available. For this budget, I did a careful optimization study and priced a specific set of hardware to find the best performance-to-price ratio possible right now. The market is constantly fluctuating, of course, but even if these particular parts disappear, it should be possible to find replacements:

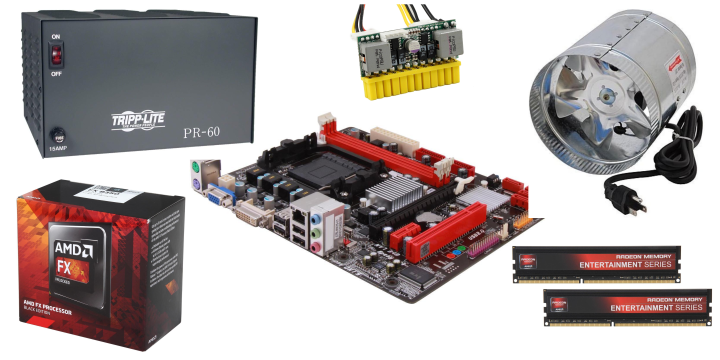

- Motherboard: BIOSTAR A960D+ AM3+ AMD 760G Micro ATX

- CPU: AMD FX-8350 Vishera 4.0GHz AM3+ 125W (8-core)

- RAM: 16GB (2 x 8GB) 240-Pin DDR3 1600

There are two options for power supply. One is to put a standard switching PC power supply in each drawer, so each system is independent. This is what the previous builders did. Another alternative, though, is to simply use a DC-DC adapter on each board and provide a single high-powered supply for the whole cluster. This makes very little difference in cost, but I think it may be more energy efficient and run cooler (because there isn’t a power supply heating up each drawer). For pricing research, I chose these product examples:

- Per-board adapter: PicoPSU-150-XT 12V DC-DC ATX

- Main power supply: Tripp Lite PR60 DC 60 Amp, 120 VAC to 13.8 VDC
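As a rough sanity check on the shared supply, the power budget can be worked out from the figures in the parts list: 60 A at 13.8 V, split across six boards, each carrying a 125 W FX-8350. This is only a sketch under stated assumptions: it treats the 60 A figure as usable continuous output, assumes the load is shared evenly, and uses the CPU's rated TDP as a stand-in for its actual draw. None of these is confirmed here.

```python
# Rough power-budget check for the shared DC supply (a sketch, not a spec).
# Figures from the parts list: 60 A at 13.8 V, six boards, FX-8350 at 125 W.
# ASSUMPTIONS: 60 A is usable continuous output; load is split evenly;
# CPU TDP stands in for real CPU draw. Check datasheets before relying on this.

SUPPLY_VOLTS = 13.8
SUPPLY_AMPS = 60.0
BOARDS = 6
CPU_TDP_WATTS = 125.0


def supply_watts(volts: float, amps: float) -> float:
    """Total output power of a DC supply: P = V * I."""
    return volts * amps


def per_board_budget(total_watts: float, boards: int) -> float:
    """Watts available to each board if the load is shared evenly."""
    return total_watts / boards


total = supply_watts(SUPPLY_VOLTS, SUPPLY_AMPS)
per_board = per_board_budget(total, BOARDS)
# Whatever is left after the CPU must cover motherboard, RAM, fans and losses.
headroom = per_board - CPU_TDP_WATTS

print(f"supply: {total:.0f} W, per board: {per_board:.0f} W, "
      f"headroom beyond CPU TDP: {headroom:.0f} W")
```

The margin left for the motherboard, RAM, and conversion losses comes out small (about 13 W per board under these assumptions), so it would be worth measuring actual draw under a full render load and checking both the supply's continuous rating and each DC-DC adapter's rating before committing to this design.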

Likewise, it’s possible to get little PC airflow fans for each drawer, but I’m considering the alternative of simply running a household duct fan to draw air straight from an air conditioner into the box, pass it over all of the boards, and then out the top.

When you add all of this up for a 6-motherboard system with the common power supply and cooling system, the total cost is around $3000, which is not that much more than an off-the-shelf commercial laptop computer.

If the project has enough additional funds, of course, we could double or triple the cluster to get double or triple the speed. We’ll have to be careful about how much we spend on hardware versus how much we pay for artist commissions, though. But it is true that the hardware will make us more productive by cutting the time we waste waiting on renders.
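The 48-core figure and the option of building extra clusters reduce to simple arithmetic. This sketch uses the numbers from this post (6 boards, 8 cores per FX-8350, about $3000 per cluster) and assumes, as the text does, that both cost and speed scale linearly with the number of clusters built.

```python
# Back-of-envelope scaling using the figures in this post.
# ASSUMPTION: cost and core count scale linearly with the number of clusters.

BOARDS_PER_CLUSTER = 6
CORES_PER_BOARD = 8       # AMD FX-8350
COST_PER_CLUSTER = 3000   # dollars, approximate total for one 6-board cluster


def cluster_summary(n_clusters: int) -> tuple[int, int, float]:
    """Return (total cores, total cost in dollars, dollars per core)."""
    cores = n_clusters * BOARDS_PER_CLUSTER * CORES_PER_BOARD
    cost = n_clusters * COST_PER_CLUSTER
    return cores, cost, cost / cores


for n in (1, 2, 3):
    cores, cost, per_core = cluster_summary(n)
    print(f"{n} cluster(s): {cores} cores, ${cost}, ${per_core:.2f}/core")
```

Under the linear assumption the cost per core stays flat at about $62.50, which is why doubling or tripling the cluster is a question of total budget rather than of efficiency.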

About 18X Faster Performance

According to benchmark data, this cluster should be able to do Blender rendering about eighteen times faster than my current desktop. Put another way, it will turn 1 minute of render time on my current desktop into about 3 seconds, or turn a render that takes an entire day into about an hour and twenty minutes.
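These conversions are just division by the speed-up factor. A minimal sketch, taking the benchmark-based 18x figure as given; by this arithmetic a full day of desktop rendering comes out to 80 minutes on the cluster.

```python
from datetime import timedelta

SPEEDUP = 18  # benchmark-based estimate quoted in the text


def cluster_time(desktop_time: timedelta, speedup: float = SPEEDUP) -> timedelta:
    """Estimated cluster render time for a job timed on the desktop."""
    return desktop_time / speedup


print(cluster_time(timedelta(minutes=1)))  # 0:00:03.333333
print(cluster_time(timedelta(days=1)))     # 1:20:00
```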

That’s a huge improvement when you’re deciding whether to revise a scene that has a small error in it (and it often happens that you don’t catch an error during modeling, animation, or pre-visualization, but only after you’ve fully rendered it).

It also makes it feasible to consider final renders with more compute-intensive options in Blender. The line-rendering tool Freestyle, for example, is mainly used for rendering still images rather than animation, because it really eats CPU cycles. But as you’ve seen in my prior update, Freestyle really has the potential to improve the look of our characters.

We’ll also likely want to use more compositing, which adds multiple render passes for each render-layer. Again, this is really going to consume rendering time.

Fortunately, rendering an animated film is an extremely parallel task, so adding extra cores really does speed things up, pretty much linearly. That’s because each frame is essentially a separate rendering job (not much can be shared between frames in 3D rendering), and you have to render thousands upon thousands of frames. You’re never likely to have more CPUs than frames, so the speed-up will be close to linear in the number of CPU cores you can put on the task.
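The near-linear claim can be illustrated with a simple model in which whole frames are handed out to workers (cores or whole boards) and every frame takes the same time. The equal-frame-time assumption and the 2000-frame shot are hypothetical; real frames vary in cost, and the model ignores per-job overhead.

```python
import math


def render_wall_time(frames: int, workers: int, secs_per_frame: float) -> float:
    """Wall-clock time when whole frames are handed out to workers.

    Each worker renders one frame at a time, so the job takes as many
    rounds as the busiest worker has frames: ceil(frames / workers).
    """
    rounds = math.ceil(frames / workers)
    return rounds * secs_per_frame


def speedup(frames: int, workers: int) -> float:
    """Speed-up over a single worker; the per-frame time cancels out."""
    return render_wall_time(frames, 1, 1.0) / render_wall_time(frames, workers, 1.0)


# Hypothetical shot of 2000 equal-cost frames.
for workers in (1, 6, 48):
    print(workers, round(speedup(2000, workers), 2))
```

In this model the speed-up only falls short of the worker count when the frames don't divide evenly, and it can never exceed the number of frames (for example, `speedup(10, 48)` is 10), which is why having thousands of frames keeps the scaling close to linear.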

Putting it Online

Of course, this only really solves the problem for me. For other project members to make any use of the cluster, they will need to upload files to it and run rendering processes there. That means we have to provide some kind of access over the Internet.
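Running rendering processes on the cluster means telling each board which frames to render. A minimal sketch, assuming one Blender process per board and contiguous frame ranges, uses Blender's standard command-line options (`-b` for background mode, `-o` for the output path, `-s`/`-e` for start and end frame, `-a` to render the animation). The file name and output pattern are hypothetical, and this only builds the command strings; how they are dispatched to the boards is left open.

```python
import shlex


def split_frames(start: int, end: int, nodes: int) -> list[tuple[int, int]]:
    """Split the inclusive range [start, end] into contiguous chunks, one per node."""
    total = end - start + 1
    base, extra = divmod(total, nodes)
    chunks = []
    first = start
    for i in range(nodes):
        size = base + (1 if i < extra else 0)  # spread the remainder over the first nodes
        if size == 0:
            break  # more nodes than frames: leave the rest idle
        chunks.append((first, first + size - 1))
        first += size
    return chunks


def blender_command(blend_file: str, out_pattern: str, first: int, last: int) -> str:
    """Build a background render command for one frame range.

    Blender processes its arguments in order, so -o, -s and -e must
    come before -a, which triggers the animation render.
    """
    args = ["blender", "-b", blend_file, "-o", out_pattern,
            "-s", str(first), "-e", str(last), "-a"]
    return shlex.join(args)


# Hypothetical shot file and output pattern; six boards as in the planned build.
for node, (first, last) in enumerate(split_frames(1, 1000, 6), start=1):
    print(f"node{node}:", blender_command("shot01.blend", "//render/shot01_####", first, last))
```

Contiguous chunks are the simplest split, but if some parts of a shot are much heavier than others, one board can finish long after the rest; handing out frames from a shared queue would balance the load better at the cost of a little more bookkeeping.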

Now, we could try to put our Ikea cluster into a data center, but the rental rates on a large enough cabinet would wipe out all of the savings from building such a cheap system. It would actually be cheaper to buy much more expensive rackmount hardware and rent just 1U or 2U of space at a data center in Dallas. Cheaper, but not cheap by a long shot. (And yes, I also costed out a 1U or 2U data center solution, but it wasn’t as cost-effective, so I won’t go into it here.)

There is an alternative, though: simply upgrade the internet service at our project site (that is, my office) and connect the cluster to it. Serving directly to the Internet from there wouldn’t really work for us, but we don’t actually need to. We already run a virtual private server (VPS) for our website, so we have services available on the Internet, including several free software server packages such as Plone, MediaWiki, Trac, and Subversion. We can install additional software there as needed: although the server is technically shared with other hosting customers, from our point of view it looks and acts just like a dedicated machine (apart from the obvious performance limits).

We can, therefore, use that server as a go-between to share information with our on-site cluster. Doing that, though, would require an upgrade to our office connection. The service is asymmetric: we can download data very quickly, but uploading is much slower (most consumer internet connections are like this). It’s not really designed to support servers.

However, for less than what 1U data center hosting would cost us, we can upgrade to a service that will give us at least 2 Mbps upload speeds. That’s still a potential bottleneck, but as long as we are just using it to synchronize data with our public server, it’s workable.
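To get a feel for what 2 Mbps means in practice: 2 megabits per second is 250 kilobytes per second, or about 900 MB per hour. The sketch below estimates transfer times; the frame count and per-frame file size are hypothetical placeholders, and protocol overhead is ignored, so real uploads will take somewhat longer.

```python
MBIT = 1_000_000  # bits per megabit (decimal units, as connection speeds are quoted)


def upload_seconds(num_bytes: int, mbps: float = 2.0) -> float:
    """Time to push num_bytes over an uplink of `mbps` megabits per second.

    Ignores protocol overhead, so real transfers will be somewhat slower.
    """
    return num_bytes * 8 / (mbps * MBIT)


# Hypothetical payload: 1,000 rendered frames at about 2 MB each.
frames_bytes = 1000 * 2_000_000
hours = upload_seconds(frames_bytes) / 3600
print(f"{hours:.1f} hours at 2 Mbps")
```

Numbers like these are why the plan treats the uplink as something for synchronizing with the public server rather than for heavy interactive use.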

And that’s the currently-proposed solution. Obviously, there are several factors here which are subject to change, and we’ll re-evaluate whether we’ve really got the most cost-effective solution we can find. But the general plan should be the same.

And the Helmer case? Well, the local Ikea does indeed have them in stock ($40 at last check). I took a picture!