HIGHLIGHTS:

November was honestly a pretty rough month for me. Not much production work accomplished, but lots and lots of IT learning and server IT work. It also involved household wiring and plumbing maintenance crises, which I won’t discuss here, but which left me pretty exhausted and exasperated.

No summary video for this month, because there isn’t enough material: development was non-existent, and the production section is only a few seconds long, so I will merge it into the upcoming December summary.

PRODUCTION:

The only production work I logged in November was about a half-hour at the end of the month, adding footage from the 2013 “Teaser Trailer” to the Soyuz interior shots in the launch sequence (LA) as animation reference. I used it for animation work in December.

SERVER IT:

Attempting to migrate the project server from our present Digital Ocean cloud service to a physical server, in preparation for moving into a colocation facility, has been a humbling and frustrating experience, and I am still not there yet.

Initially, I had to confront my ignorance of basic computer networking. I’ve generally gotten by with copying basic recipes to set up my LAN, but this project required me to actually understand what I was doing!

So, I spent a good part of this month on straight-up training on basic networking, using some online video class resources. Specifically:

https://yewtu.be/watch?v=qiQR5rTSshw

https://www.youtube.com/watch?v=qiQR5rTSshw

I also continued watching a series of videos on the Proxmox virtualization environment, which I recommend:

https://linux.video/pve-course

https://www.youtube.com/playlist?list=PLT98CRl2KxKHnlbYhtABg6cF50bYa8Ulo

Even after all of this training time, I still struggled pretty hard to get my Proxmox VE set up the way I wanted on the Dell PowerEdge R720 that I bought for colocation.

And then I moved on to migrating my YunoHost server environment from the cloud server to the PowerEdge. Problems arose, however, because some of the YunoHost app restore scripts do not appear to work correctly, namely:

- PeerTube

- Gitea

- Nextcloud

Although the backups appear to have all the relevant data in them, the restore scripts do not put that data back onto the server: application directories that should be filled with app data remain empty. As of December, this problem remains unresolved.

Between these problems and a year-end money crunch, the migration has been stalled yet again.

WORKSTATION IT:

On my production workstation machine, Sintel, I installed additional versions of Blender to try to keep up to date. I now have working copies of Blender 2.71, 2.79b, 2.83LTS, 2.93LTS, 3.3.0, 3.6.5LTS, and 4.0.1.

I have launchers set up for each of those, with two for 2.79: one with and the other without the “--enable-new-depsgraph” command-line flag.
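Keeping that many launchers in sync by hand gets tedious. As a minimal sketch, assuming each version is unpacked under a hypothetical `/opt/blender-<version>/` directory and that the 2.79 flag is spelled `--enable-new-depsgraph`, a short Python script can generate freedesktop.org `.desktop` launcher contents, including the extra 2.79 launcher with the flag:

```python
# Hypothetical install locations: each Blender build unpacked under /opt.
BLENDER_VERSIONS = {
    "2.71": "/opt/blender-2.71/blender",
    "2.79b": "/opt/blender-2.79b/blender",
    "2.83 LTS": "/opt/blender-2.83/blender",
    "2.93 LTS": "/opt/blender-2.93/blender",
    "3.3.0": "/opt/blender-3.3.0/blender",
    "3.6.5 LTS": "/opt/blender-3.6.5/blender",
    "4.0.1": "/opt/blender-4.0.1/blender",
}


def desktop_entry(name: str, exec_line: str) -> str:
    """Return the text of a minimal freedesktop.org .desktop launcher."""
    return "\n".join([
        "[Desktop Entry]",
        "Type=Application",
        f"Name={name}",
        f"Exec={exec_line}",
        "Terminal=false",
        "",
    ])


def blender_launchers() -> dict:
    """Map launcher file names to .desktop contents, one per version."""
    launchers = {}
    for version, binary in BLENDER_VERSIONS.items():
        slug = version.split()[0]  # "3.6.5 LTS" -> "3.6.5"
        launchers[f"blender-{slug}.desktop"] = desktop_entry(
            f"Blender {version}", binary)
        if slug == "2.79b":
            # Second 2.79 launcher that opts in to the experimental depsgraph.
            launchers[f"blender-{slug}-newdepsgraph.desktop"] = desktop_entry(
                f"Blender {version} (new depsgraph)",
                f"{binary} --enable-new-depsgraph")
    return launchers
```

Writing each returned string to `~/.local/share/applications/<name>` would make the launchers show up in most desktop menus, XFCE included; the script deliberately leaves that file-writing step out.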

I also installed these Blender versions and their missing dependencies on the host operating system of the new PowerEdge server, Narya. This will allow me to render shots on-site while I still have the server here (and later, once the server is moved to the data center, I can run these jobs over an SSH or VPN tunnel).
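Rendering on a headless server means running Blender in background mode. Here is a minimal sketch, using Blender's standard `-b`, `-o`, `-s`, `-e` and `-a` options. The file name, output pattern, and the SSH host name `narya` are assumptions for illustration. It builds the command either for local use or for sending over SSH:

```python
import shlex


def render_command(blend_file, start, end, output_pattern,
                   blender="blender", ssh_host=None):
    """Build a background (no-UI) Blender animation render command.

    Blender applies command-line options in order, so the output path and
    frame range must come before -a (render animation).
    """
    args = [blender, "-b", blend_file,
            "-o", output_pattern,    # '#' characters become the frame number
            "-s", str(start), "-e", str(end),
            "-a"]
    if ssh_host is None:
        return args
    # Over SSH the remote side receives a single shell-quoted command string.
    return ["ssh", ssh_host, shlex.join(args)]
```

The returned list can be passed straight to `subprocess.run(...)`; in the output pattern, a leading `//` means "relative to the .blend file", which keeps renders next to the shot files on the shared drive.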

DOCUMENTATION:

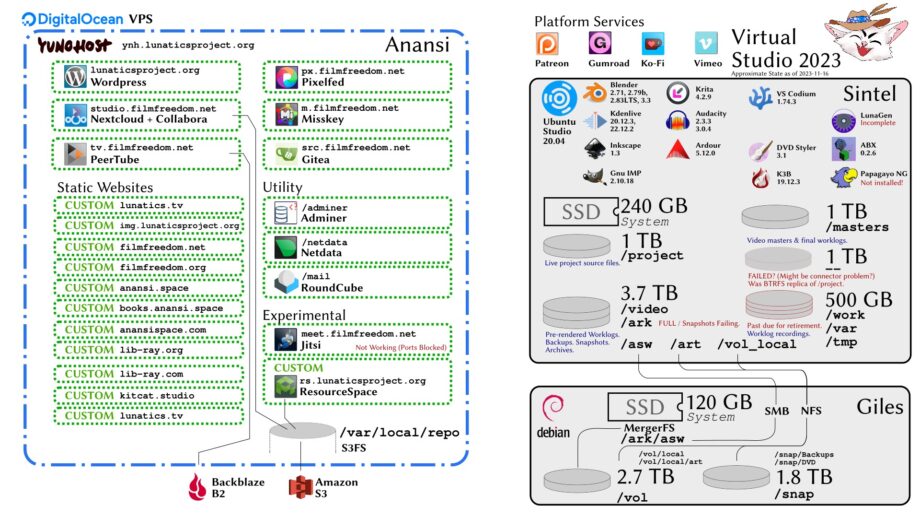

I also defined and drafted a plan drawing of the existing 2023 Virtual Studio configuration (cloud-based) and the planned 2024 Virtual Studio (colocated).

Just drawing this in Inkscape took quite a while; there was a lot of detail to work out. Then I wrote it up on Patreon.

Virtual Studio for 2024

I’m getting close to setting up the new colocation server. Currently struggling to understand computer-networking issues in Proxmox and how to set up a VPN for maintenance access.

Current Virtual Studio in 2023

I decided to document my current cloud-based setup, and then plan out what I’m hoping to start out with in 2024.

The cloud-based configuration is basically just YunoHost web apps installed in a VPS from Digital Ocean. It works… okay.

But there are some problems. In order to get these apps to all run without crashing, I had to upscale the VPS to the point where it’s costing me about $70/month.

And for that to work, I needed to move all the data-intensive parts onto remote object storage.

I originally used Amazon S3, but found that some of the apps could wind up costing a lot, due to data egress charges — I got hit with $50 bills from AWS for a couple of months in 2022, before I shut down the problem area (which was Gitea, handling LFS requests, due to search engines hitting my production source repositories).

I solved that both by updating my ‘robots.txt’ file to tell the search bots to leave my repo contents alone, and by moving the object storage for Gitea to Backblaze B2, which has much lower egress fees.
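For anyone wanting to do the same, here is a minimal sketch of that kind of robots.txt. It assumes Gitea's usual `/{owner}/{repo}/src|raw|media/...` URL layout, and the owner and repo names are hypothetical. The rules are checked with Python's standard `urllib.robotparser`. That parser only does prefix matching (no `*` wildcards), so each path is spelled out:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of one repository's file views
# (src, raw, and LFS "media") while still allowing the rest of the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /example-owner/example-repo/src/
Disallow: /example-owner/example-repo/raw/
Disallow: /example-owner/example-repo/media/
Allow: /
"""


def make_parser(text: str) -> RobotFileParser:
    """Parse robots.txt text without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    parser.modified()  # mark as read so can_fetch() trusts the parsed rules
    return parser
```

Well-behaved crawlers honor these rules, but robots.txt is only a request. Moving the LFS storage to cheaper egress was still worth doing as the actual cost control.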

I also moved PeerTube to Backblaze B2, although it should be noted that many of the videos are still on Amazon S3, because PeerTube doesn’t migrate existing videos. So, it turns out, I have videos in local storage, on Amazon S3, and Backblaze B2 at the moment!

Fortunately, a feature in PeerTube v6 will fix this problem by providing a better storage migration script.

My workstation is pretty good, but there are some maintenance and organization issues that are really pressing. My snapshot backup system has crashed, due to inadequate storage on my archive drive.

I also have one probably failed hard drive and one that is still going fine, but has 11 years of accumulated run time. It’s getting old enough that I really should retire it before the bearing fails or something (I currently use it for “scratch” data to minimize the impact of a failure).

And I’m kind of stranded on Ubuntu Studio 20.04 if I don’t want to embrace Snap packages, and I really, really don’t like them. But Ubuntu apparently does. As time passes, it’s getting more uncomfortable to stick with this older release.

New Virtual Studio for 2024

Which brings me to the new studio arrangement that I’m working on. I got a quote for datacenter colocation of around $70 to $80 per month (there may be a surcharge for extra power consumption, depending on how I use it).

So, the transition will be very nearly cost-neutral. But the capacity will increase enormously (more than 10X the available RAM, CPU, and disk space).

I plan to leave the static websites on Digital Ocean, which past experience tells me I can run on a minimum-size droplet, currently about $6/month. Although YunoHost may seem like overkill for this, it very conveniently automates log management, certificate renewals, and backups. And, of course, Digital Ocean droplets have excellent availability, which is something I’ll probably be sacrificing by going to a self-managed physical web server.
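Putting the monthly figures from this post side by side gives the comparison below. It leaves out object-storage fees and any colocation power surcharge, neither of which is priced here:

```latex
\begin{aligned}
C_{2023} &\approx \$70 \text{ per month (VPS)} \\
C_{2024} &\approx \underbrace{(\$70 \text{ to } \$80)}_{\text{colocation}} + \underbrace{\$6}_{\text{droplet}} = \$76 \text{ to } \$86 \text{ per month} \\
\Delta C &\approx \$6 \text{ to } \$16 \text{ per month, i.e. about } 9\% \text{ to } 23\% \text{ more}
\end{aligned}
```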

That shouldn’t be too much of a problem for the studio server, though, as long as I have some kind of website that stays up (I plan to implement some kind of “soft failure” mechanism, like displaying a message that the studio server is down, so some things won’t work — this will mainly affect embedded PeerTube videos).
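Since the websites are static, one simple form of "soft failure" is a small scheduled job that probes the studio server and writes a status banner the site can include. Here is a minimal sketch using only Python's standard library. The URL, timeout, and banner markup are hypothetical, and this is one possible approach, not a settled design:

```python
import urllib.request

# Hypothetical address of the studio server.
STUDIO_URL = "https://studio.example.com/"


def studio_is_up(url: str = STUDIO_URL, timeout: float = 5.0) -> bool:
    """Return True if the studio server answers an HTTP request in time."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (OSError, ValueError):
        # URLError, HTTPError, and timeouts all derive from OSError;
        # ValueError covers a malformed URL.
        return False


def status_banner(up: bool) -> str:
    """HTML fragment for the static site; empty when everything is fine."""
    if up:
        return ""
    return ('<div class="studio-down">The studio server is currently down, '
            'so embedded videos and some other features will not work.</div>')
```

Run from cron every few minutes, the job would write `status_banner(studio_is_up())` to a file the site template pulls in. A browser-side check would be the alternative if the banner needs to react faster.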

The active YunoHost web applications will then run in a large virtual machine hosted in Proxmox on the server I’m setting up. This gives me the flexibility to create additional VMs if necessary to accommodate future software (for example, I might set one up to manage Docker images, which may be available for software that doesn’t have a YunoHost “app” installer).

I also can run Blender on the host machine (or perhaps in another VM or LXC container in the future), so I can take advantage of this machine for rendering.

I plan to add some additional storage drives at home; retire that old hard drive; and reorganize some of the other partitions. Since I’ve been keeping screencast worklogs for four solid years now, I figure I probably should dedicate some space to those and consolidate them onto one partition.

And I’m going to move from Ubuntu Studio to AV Linux on my workstation. They are similar distributions, in terms of applications and the desktop environment (XFCE), but AV Linux continues with DEB packages, which is more in line with my preferences.

This chart shows an additional server at home — “Atoz” (named after the librarian on Sarpeidon in Star Trek’s “All Our Yesterdays”).

I had looked into ways to upgrade “Giles” to be able to hot-swap the Dell drive sleds from the datacenter server (“Narya”), but there doesn’t seem to be any easy way. Taking the drives out of the sleds is contrary to the whole point of the hot-swap sleds, so this seemed like a problem.

In the end, I decided the best option was probably to acquire another inexpensive Dell server, and use that at home. This also gives me the opportunity to run some YunoHost apps on our LAN and also to use that machine for rendering.

I’m uncertain whether I’ll actually set up Atoz by January, but we’ll see. If not, I’ll likely add it later.

Not too many other things will change. Of course, I’ll be upgrading to newer versions of a lot of the software, both on the server and on my workstation.

Virtual Studio as a Deliverable

I’m not exactly sure what I want to do with this platform, after I finish setting it up. It’s not really an original product. It’s more of a deployment, based on AV Linux and YunoHost. But I may be able to document it or even provide configuration management files for it (an Ansible playbook, perhaps).

There are a few apps that I’m planning to do development work on, and/or which need to be packaged better. I would like to set a goal for 2024 of creating DEB packages for those, which will be a whole new adventure for me.
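At its simplest, a binary DEB is a directory tree containing a `DEBIAN/control` file, built with `dpkg-deb --build <dir>`. Here is a minimal sketch of generating that control file. The package name, version, and maintainer are hypothetical, and real packages will want more fields and a proper extended description:

```python
from pathlib import Path


def control_file(package, version, maintainer, description,
                 architecture="all", depends=()):
    """Return a minimal DEBIAN/control file for a binary package."""
    lines = [
        f"Package: {package}",
        f"Version: {version}",
        f"Architecture: {architecture}",
        f"Maintainer: {maintainer}",
    ]
    if depends:
        lines.append("Depends: " + ", ".join(depends))
    lines.append(f"Description: {description}")
    return "\n".join(lines) + "\n"


def write_package_tree(root: Path, control: str) -> Path:
    """Create root/DEBIAN/control; payload files go beside it under root."""
    debian = root / "DEBIAN"
    debian.mkdir(parents=True, exist_ok=True)
    path = debian / "control"
    path.write_text(control)
    return path
```

The installed files themselves would sit under the same root at their final paths (for example `root/usr/share/...`) before running `dpkg-deb --build`.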

Another possibility is to offer multimedia project hosting. All of these apps allow for many users, so I could probably create an inexpensive hosting package based on that. Which might make the server pay for itself. I have a little anxiety about running that, as inexperienced as I am. But it’s a possibility.

Remaining Problems

The reason I’m not moved into the datacenter yet is mostly that I have to solve a couple of technical networking problems. The first is how to correctly configure networking on the host and the VMs in Proxmox, as well as a pfSense-based VPN router:

- Host NFS on private virtual network to share the ‘repo’ drive.

- Run Blender on the host for rendering to and from ‘repo’ drive.

- Connect iDRAC and Proxmox to a VPN for maintenance access from home.
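The NFS part of that plan comes down to an `/etc/exports` entry that shares the drive only with the private network. Here is a minimal sketch using Python's `ipaddress` module to keep the addressing consistent. The 10.10.10.0/24 network, the host and VM addresses, and the `/srv/repo` path are all hypothetical:

```python
import ipaddress

# Hypothetical private virtual network between the Proxmox host and its VMs.
PRIVATE_NET = ipaddress.ip_network("10.10.10.0/24")
HOST_IF = ipaddress.ip_interface("10.10.10.1/24")   # host side of the bridge
YUNOHOST_VM = ipaddress.ip_address("10.10.10.10")   # hypothetical VM address


def exports_line(path: str, network: ipaddress.IPv4Network,
                 options=("rw", "sync", "no_subtree_check")) -> str:
    """One /etc/exports entry that shares `path` only with `network`."""
    return f"{path} {network}({','.join(options)})"
```

After adding the line to `/etc/exports`, `exportfs -ra` re-exports everything. Blender running on the host can read and write the same directory directly, without going through NFS at all.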

And then I’ll need to use OpenVPN to set up a connection from my workstation at home for maintenance. I decided against trying to set up a site-to-site VPN because my home ISP doesn’t provide a fixed IP address, and I don’t want to mess with Dynamic DNS to get around this. Since the VPN is just for server maintenance access, I probably won’t be using it often, so I want to keep this simple.
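A remote-access ("road warrior") setup avoids the fixed-IP problem because the home workstation always dials out to the datacenter's fixed address, so the server never needs to know the home IP. Here is a minimal sketch of generating a client config from standard OpenVPN directives. The server host name, port, and certificate file names are hypothetical:

```python
def openvpn_client_config(server_host, port=1194, proto="udp",
                          ca="ca.crt", cert="workstation.crt",
                          key="workstation.key"):
    """Minimal OpenVPN client config for maintenance access from home."""
    return "\n".join([
        "client",                 # pull settings from the server
        "dev tun",                # routed (layer 3) tunnel
        f"proto {proto}",
        f"remote {server_host} {port}",  # the fixed datacenter address
        "resolv-retry infinite",
        "nobind",                 # no fixed local port; the client just dials out
        "persist-key",
        "persist-tun",
        "remote-cert-tls server",  # only accept a server certificate
        f"ca {ca}",
        f"cert {cert}",
        f"key {key}",
        "",
    ])
```

Whether the server end lives on a physical pfSense box or a datacenter VPS, the client side stays the same file.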

And I’ve been waffling between buying an actual physical router for the VPN and renting a VPS in the datacenter’s cloud for it, which they do offer (a pay-up-front versus pay-monthly trade-off).

I don’t think this is objectively difficult, but computer networking has always been a peripheral concern for me. In the past, I mostly just memorized a few things I had to do to get it working. This arrangement requires me to actually understand the /etc/network/interfaces files! And I’m still not there yet.
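For reference, Proxmox VE writes its bridges into `/etc/network/interfaces` in ifupdown2 syntax (`bridge-ports`, `bridge-stp`, `bridge-fd`, as in the default `vmbr0` stanza). Here is a minimal sketch of a helper that renders one bridge stanza and validates the address. The internal bridge `vmbr1` on 10.10.10.0/24 is a hypothetical example:

```python
import ipaddress


def bridge_stanza(name: str, address: str, ports: str = "none") -> str:
    """Render an ifupdown2-style bridge stanza as used by Proxmox VE.

    ports="none" gives an internal-only bridge (no physical NIC attached),
    which suits a private host/VM network; naming a physical NIC instead
    connects the bridge to the outside network.
    """
    iface = ipaddress.ip_interface(address)  # raises ValueError if malformed
    return "\n".join([
        f"auto {name}",
        f"iface {name} inet static",
        f"        address {iface.with_prefixlen}",
        f"        bridge-ports {ports}",
        "        bridge-stp off",
        "        bridge-fd 0",
        "",
    ])
```

VMs attached to `vmbr1` then get addresses in the same subnet, and the host reaches them at 10.10.10.1 without any traffic leaving the machine.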

But, assuming I can work through that, I should be no more than a week or so away from the move.

[SPOILER: it did NOT happen in a week. Sorry, YunoHost app restores also gave me problems].

Throwback Timelapse: October 2019

I started logging my workdays with a screencast in 2019, but didn’t come up with the “monthly topical summaries” until 2021, so I didn’t make any for 2019 back then. I decided to start making the 2019 topical summaries a month at a time, after the current ones, and add them to my retroactively created “Monthly Summary” posts on my Production Log. So I just completed:

October 2019 Monthly Summary (Production Log)


This covers most of the set dressing for the “Central Universal Market” set in Baikonur, which is the open-air market near the train station where Georgiana buys the decorative box she takes with her (which becomes a plot point in episode 4). The set appears in shots TB-2-D & E in the “Touring Baikonur” sequence in the pilot.

This month also saw some work I did for a local community theater troupe, “Southwest Reprise Theatre” in Fort Worth, TX: there’s a bit of me editing a video of their play “Alice in Wonderland”, and also almost the entire production of “Devastation of the Xmas”, which I prepared for their Christmas play that year.

And finally, this was when I first started writing the Ink/Paint Compositing feature for Anansi Blender Extensions (ABX), as well as importing it into my Eclipse IDE workspace.