My feed on Mastodon has been filling up with knee-jerk reactions to Microsoft’s new “Recall” software for Windows 11. In brief, this feature will capture your screen (and other input?) periodically, and use this to inform an AI-based search to help you keep track of your past activities.

There are many issues that this raises, clearly. But a fair number of people seem to have concluded that screenlogging as a practice is useless, harmful, or both. I have neither direct knowledge nor interest in whatever Microsoft is doing specifically with “Recall”, but I have been doing screencast logs for over five years now, and it has been a valuable practice for me.

So I think I’m qualified to share an opinion about why you might want to do something like this, and how to do it safely. Specifically, I want to explain my own process, where I see it benefiting, and what pitfalls there are to avoid.

Why or Why Not?

As simple pros and cons:

Pros:

- Track productivity/morale

- Self-management/feedback

- Process documentation

- Data for planning/time-projections

Cons:

- Security – passwords!

- Privacy – social media, etc.

- Overhead time

- Disk space

Context & History

For anyone not a regular reader of my Production Log, the project I’ve been working on for the last decade of my life is an open-movie animation project, “Lunatics!” It’s not my only activity, but it’s what I mostly talk about in public. Much of the objective of this project is to explore new free/open-source media-production and studio-management software. I spend a lot of my time learning and installing new software, as well as developing workflow around it, and a smaller amount on actual software development. I also have to do a lot of maintenance tasks on web and desktop authoring applications.

Lunatics Project has been a mixture of successes and failures. It failed to earn big crowdfunding support and launch as a series the way I had hoped to do, but on the other hand, I have continued it for a long time, got quite a bit of help, and still plan to finish my pilot episode.

It has been a very long, hard slog, though, with very little in the way of tangible rewards. That is the classic recipe for burnout, and it actually led to some medical issues for me in 2018, which prompted some lifestyle changes and a lot of personal reflection.

I had become very concerned that I wasn’t reaching my goals, that I wasn’t working enough towards them, and that I might be wasting a lot of my time on social media. I wasn’t tracking any of that, objectively, so I had only my own perception to guide me. And that can be treacherous — it’s easy to be either too forgiving or too critical when dealing with yourself.

A contractor working for me on the project had suggested a productivity management tool which screen captured and summarized time on the contractor’s computer to report to a manager for remote work. My immediate reaction to this was horror, and I will say for the record: we did NOT do that. However, it did put the seed of the idea in my head as a self-management tool.

I discovered that I could set Vokoscreen to record at one frame per second, which uses relatively few resources, yet gives sufficient granularity for logging. The recording could then be sped up 30X with no frames lost, and I found that I could usually follow what was going on at that rate. If you then double the speed to 60X, it also makes for a very simple time conversion: 1 second of video = 1 minute of work, and 1 minute of video = 1 hour.
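The speed arithmetic above is easy to check. This is a minimal Python sketch that assumes only the 1 fps capture rate; the function names are made up for illustration and are not part of Vokoscreen or Kdenlive.

```python
CAPTURE_FPS = 1  # frames recorded per second of real time


def playback_fps(speed: float, capture_fps: float = CAPTURE_FPS) -> float:
    """Frame rate needed to show every captured frame at the given speed-up."""
    return capture_fps * speed


def real_seconds(playback_seconds: float, speed: float) -> float:
    """Real elapsed time represented by a stretch of sped-up playback."""
    return playback_seconds * speed


# At 30X, every captured frame is shown once in a standard 30 fps video.
print(playback_fps(30))              # 30
# At 60X, one second of playback covers one minute of work...
print(real_seconds(1, 60))           # 60
# ...and one minute of playback covers one hour.
print(real_seconds(60, 60) / 3600)   # 1.0
```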

I used Kdenlive to edit these, since that’s what I was already using for production work on Lunatics.

So, starting on Jan 1, 2019, I decided to try it out: I began screencasting my whole workday on the computer, then editing it down to the actual work bits afterwards. Initially I sped the logs up only 20X, because at the time that was the maximum that Kdenlive could do in one pass.

I’ve made many refinements since then to software and practice, but I have not stopped since, and on Jan 1, 2024, I passed the five year mark.

Benefits I Found

Initially, my goal was “self-management”: I wanted to track how much time I was actually putting in on my project, versus how much I was surfing Facebook or some other less productive activity. It is good for this if you need it, and sometimes I do.

Over time, though, I found that, first of all, my self-assessment wasn’t remotely fair: I was putting in lots of work time. But what often happened is that, once I solved a problem, I had a tendency to forget the problem ever existed. At which point, it became difficult to account for what I had spent my time on. My reaction to past logs was often “Wow! I completely forgot I had to do all that!”

Possibly this is some kind of ADHD “executive dysfunction” issue for me. Or perhaps it’s completely normal. I don’t know. It does make it easier to focus on the next problem, but it also makes it easy to discredit your own work, which can be very demoralizing.

So I found that having this tangible proof of my work was a significant morale boost, in the absence of third party acclaim. It’s a little like word-counts for writers and passing unit tests for programmers. It’s good to give yourself a more tangible reward for work done. This helps me to avoid burnout, and it’s worth it just for that.

In the same vein, whether I should chalk it up to ADHD or Autistic Spectrum personality, or whatever, I often do my best work in a “flow state”, in which time just seems to vanish. Writing, programming, video editing, and other creative activities often just blur together, and I’m completely unaware of the passage of time. These are, obviously, the hardest things to take good notes for, because note-taking distracts you from your work. But screencasting like this does not: it’s taking notes for you.

Also, if you’re working experimentally, and you don’t know whether what you’re doing is going to work, it’s very tedious to take exact notes on what you did each time (even though, as a scientist, that’s what you’re supposed to do!), so you wind up either having to repeat everything once you figure it out, or if you’re feeling pressed for time, just skipping the notes and getting on with things.

This means that I often had very poor notes on the most critical things I was working on. And if, like me, you find yourself moving from one domain (say, server administration) to a completely different one (say, Blender animation and modeling) over a period of months, it becomes extremely common to completely forget how something worked, and then have to re-learn it all over again six months or a year later when you come back to it.

And that is where you really appreciate having that screenlogger looking over your shoulder the whole time. Because now you can go back to the log and watch yourself doing the thing you’ve forgotten how to do. This is extremely valuable — it can save many days of relearning effort. And there is a great feeling of security knowing that this record is being kept.

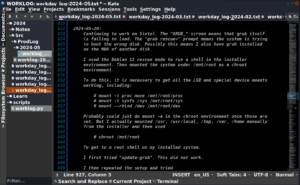

That said, this does NOT eliminate the need to take text notes, for which I use Kate (a text editor). Like so:

Each dated entry serves as a kind of index. I can use grep on the command line to search for keywords, then look up the entries, find the dates, and, later, pull up the video logs for that day if I want more detail than what I wrote down.
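For readers who prefer a script to raw grep, the same lookup can be sketched in Python. It assumes, hypothetically, that each monthly notes file is a `.txt` file in one directory and that every entry starts with a line beginning with an ISO date (`YYYY-MM-DD`); it returns the dates of entries that mention a keyword, which is what you need in order to pull up the matching video logs.

```python
import re
from pathlib import Path

# Hypothetical convention: each entry begins with a line starting "YYYY-MM-DD".
DATE_LINE = re.compile(r"^(\d{4}-\d{2}-\d{2})\b")


def find_entry_dates(text: str, keyword: str) -> list[str]:
    """Return the dates of entries whose text mentions keyword (case-insensitive)."""
    dates = []
    current = None
    needle = keyword.lower()
    for line in text.splitlines():
        m = DATE_LINE.match(line)
        if m:
            current = m.group(1)  # start of a new dated entry
        if current and needle in line.lower() and current not in dates:
            dates.append(current)
    return dates


def search_notes(notes_dir: str, keyword: str) -> dict[str, list[str]]:
    """Search every monthly notes file (assumed *.txt) in notes_dir."""
    results = {}
    for path in sorted(Path(notes_dir).glob("*.txt")):
        hits = find_entry_dates(path.read_text(encoding="utf-8"), keyword)
        if hits:
            results[path.name] = hits
    return results


sample = """2024-05-20
+ set up Ansible vault for web server
2024-05-21
* Blender rigging, arm IK
"""
print(find_entry_dates(sample, "ansible"))  # ['2024-05-20']
```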

Finally, of course, it’s much easier to make projections on future work with this kind of information available. It’s still not easy, but it is easier.

Costs, Risks, and Problems

Now, of course, there is no such thing as a perfect solution. Video logging is probably not for everyone.

My process, which I’ll describe below, is time-consuming. I spend several days a month just editing and annotating worklogs. That’s a very tangible cost. But, like the time spent writing unit tests or cleaning your workspace, it is time you will usually get back later, though only if your process is streamlined enough to keep it from becoming overwhelming. I’ll discuss how I dealt with that in the section on “Technique”, below.

This is an area where there is potential for artificial intelligence to speed things up: the AI could try to identify what you’re working on, which part of the screen is most interesting, and also whether you are actually doing anything. It could OCR text on the screen to write some kind of textual notes without you having to take those notes.

I’m frankly skeptical about how good a job the AI could do, but I definitely see some potential there.

Security and Privacy

The biggest issue (which features prominently in the knee-jerk opposition I observed to the Microsoft product) has to be that screen-capture will often catch sensitive information. If you think it likely that your logs will be seen by other people, then you may be setting yourself up to have to do some redacting.

Most command-line utilities don’t show passwords as you are typing them. Most web applications will hide the password characters, although they may show a little dot for each character, revealing how long your password is. But some tools will just let it all hang out: Ansible is particularly bad about this!

I was using Ansible extensively for server management in 2019-2021, and by design, as it runs, it shows you the data going from it to the server and back, all in plain text. This is true even if you’ve taken care to put all the sensitive passwords and other secrets into an encrypted “vault” file. The vault makes sure they aren’t stored in plaintext on your disk, but the terminal output is a whole other issue!

Obviously, you might also capture private text conversations with friends or family that would otherwise be expected to be confidential. Or you might capture those hot takes on social media. And the list of screen activities you might not want to preserve for the ages goes on.

You need to consider all of these things when deciding on a workflow for screenlogging, if you’re going to do that.

Technique

I’ve revised my process a lot since I started in 2019, but I’m not going to bore you with all the intermediate steps. I’ll just describe my 2024 worklog process.

First of all, my desktop workstation has two video monitors: one 43-in UHD (3840×2160) monitor I use for most work, and a 24-in HD (1920×1080) monitor. The smaller one has much more precise color representation (I originally referred to it as my “color check” monitor), but the larger one is easier to work on: Lunatics is mastered at 1920×1080, so it’s possible to have a full-size, full-resolution frame in the canvas/viewport space of Inkscape, Blender, or Kdenlive, and still have plenty of room for the UI widgets around it.

When assembled as a single combined desktop, this results in a 5760×2160 frame, with a cutout notch on the upper right. Here’s a screen capture (reduced a bit):

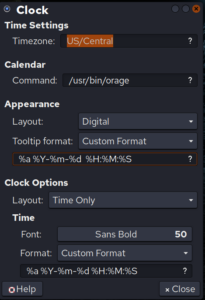
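The combined frame size is simply the bounding box of the monitors. This minimal sketch assumes the HD monitor sits to the right of the UHD one, aligned to the bottom edge (offset 3840, 1080), which is what would produce the empty notch at the upper right; the actual offsets of an X session can be read from `xrandr`.

```python
from typing import NamedTuple


class Monitor(NamedTuple):
    width: int
    height: int
    x: int  # offset of the monitor's left edge in the combined desktop
    y: int  # offset of the monitor's top edge


# Assumed layout: UHD monitor at the origin, HD monitor to its right and
# aligned to the bottom edge, leaving an empty notch at the upper right.
MONITORS = [Monitor(3840, 2160, 0, 0), Monitor(1920, 1080, 3840, 1080)]


def desktop_size(monitors):
    """Bounding box of all monitors, i.e. the frame size a full capture must use."""
    width = max(m.x + m.width for m in monitors)
    height = max(m.y + m.height for m in monitors)
    return width, height


def unused_pixels(monitors):
    """Pixels inside the bounding box that no monitor covers (assumes no overlap)."""
    w, h = desktop_size(monitors)
    return w * h - sum(m.width * m.height for m in monitors)


print(desktop_size(MONITORS))   # (5760, 2160)
print(unused_pixels(MONITORS))  # 2073600, i.e. a 1920x1080 notch
```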

This is using the XFCE 4 desktop, which is standard in “Ubuntu Studio” and “AV Linux” distributions. Notice that I have a prominent time and date display on my desktop. While I do appreciate the clock, the real reason is for the log: this display becomes a time index in the resulting video. It’s more useful than a frame counter for editing.

This is the XFCE 4 “Clock” applet, linked to the “Orage” calendar app. You’ll note that I’m using custom formatting to have the time and date stamp written out this way, and in a large font. This makes it so that the time index can be easily read, even after the image has been reduced in size for the log.

Now, this full screen is very large, and it’s desirable not to have to save all of those pixels for the long term. So I actually do this in stages.

Capture

Though I started with Vokoscreen, and you can still do it that way, it’s easy to make errors if you are setting it up daily, so it’s nice to replace this with a special-purpose script. I was able to check the parameters that Vokoscreen was sending to FFMPEG to do the recording, and just copied those over into a Python script I wrote to capture my logs. It’s pretty simple:

```python
#!/usr/bin/env python3
"""
Run ffmpeg to record worklog video from both displays and store in /worklog/capture.
"""
import datetime
import os
import subprocess
import sys

WORKLOG_DIR = '/worklog/capture'
#MONITOR_TYPE = 'single1920x1080'
MONITOR_TYPE = 'dual5760x2160'

# Units
K = 1024
M = K*K
G = K*K*K

# Get the available space on the filesystem that holds the captures
# (checking '.' would measure whatever directory the script is run from).
# f_bavail counts blocks usable by a normal user, in units of f_frsize.
s = os.statvfs(WORKLOG_DIR)
free_space = (s.f_frsize * s.f_bavail * 1.0)/G
if 1.0 < free_space < 10.0:
    print("WARNING: Available Space < 10 GiB!")
elif 0.001 < free_space <= 1.0:
    print("WARNING: SPACE VERY LOW!")
elif free_space <= 0.001:
    print("ERROR: NO SPACE FOR WORKLOG!")
    sys.exit(1)
else:
    print("%5.1f GiB Available Space." % free_space)

def now():
    return datetime.datetime.now().strftime('%Y-%m-%d_%H-%M-%S')

def ffmpeg_cmd(size):
    """Capture the X display at 1 fps and encode it as H.264 in Matroska."""
    return ['/usr/bin/ffmpeg', '-report', '-loglevel', 'quiet',
            '-f', 'x11grab', '-draw_mouse', '1', '-framerate', '1',
            '-video_size', size, '-i', ':0.0+0,0',
            '-pix_fmt', 'yuv420p', '-c:v', 'libx264', '-preset', 'veryfast',
            '-q:v', '1', '-s', size, '-f', 'matroska']

# One profile per monitor setup
ffcmds = {'dual5760x2160': ffmpeg_cmd('5760x2160'),
          'single1920x1080': ffmpeg_cmd('1920x1080')}

ffcmd = ffcmds[MONITOR_TYPE]
timestamp = now()
outfile = os.path.join(WORKLOG_DIR, 'worklog-' + timestamp + '.mkv')
ffp = subprocess.Popen(ffcmd + [outfile],
                       stdin=subprocess.PIPE, stdout=subprocess.PIPE,
                       stderr=subprocess.STDOUT)
print("Worklog recording for %s" % timestamp)
print("Press <enter> to stop recording.")
input()
# Sending 'q' on ffmpeg's stdin makes it stop and finalize the file cleanly
ffp.communicate('q'.encode())
print("Worklog stopped at %s" % now())
```

You’ll note that this script doesn’t take arguments. I’ve included them as constants at the top of the file. That’s because I don’t want to have to remember any arguments for a routine daily practice like this. I want it preset so it just runs when I call it, and already has things set up correctly. On the other hand, so that I can use it on another machine with just the one 1920×1080 monitor, I do have two profiles defined — obviously you could add more.

At this stage, the log is full-size and real-time. The FFMPEG command does not attempt to speed up the video, though I suspect it would be possible to record it at 30X speed to save a step.
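Because the script stamps each filename with the start time, and each captured frame is one second of real time, you can estimate the wall-clock time at any point in an uncut capture. This is a hypothetical helper, assuming the capture ran continuously at a steady 1 fps; once clips have been cut, the on-screen clock remains the authoritative time index.

```python
import datetime
import re

# Matches names written by the capture script, e.g. worklog-2024-05-21_09-15-00.mkv
NAME = re.compile(r"worklog-(\d{4}-\d{2}-\d{2}_\d{2}-\d{2}-\d{2})\.mkv$")


def capture_start(filename: str) -> datetime.datetime:
    """Parse the recording start time out of a capture filename."""
    m = NAME.search(filename)
    if not m:
        raise ValueError(f"not a worklog capture: {filename}")
    return datetime.datetime.strptime(m.group(1), "%Y-%m-%d_%H-%M-%S")


def wall_clock(filename: str, playback_seconds: float, speed: float = 1) -> datetime.datetime:
    """Approximate wall-clock time at a playback position in an uncut capture."""
    return capture_start(filename) + datetime.timedelta(seconds=playback_seconds * speed)


name = "/worklog/capture/worklog-2024-05-21_09-15-00.mkv"
print(wall_clock(name, 3600))           # 2024-05-21 10:15:00 (real-time capture)
print(wall_clock(name, 120, speed=30))  # 2024-05-21 10:15:00 (30X version)
```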

Non-Computer Stuff

Not everything I want to log is done on the computer. I also do repair work and other tasks I want to keep a record of. For these, I’ve adopted the habit of snapping pictures with my smartphone, and keeping those still images. Occasionally I’ve set up and recorded video, but it’s usually not worth that much trouble. I upload these to my computer in daily batches.

Pre-Render/Raw Edit

Originally, I had to run the video through Kdenlive twice to get a 30X speed-up, because 20X was the maximum setting on the “Speed” effect. Since then, I’ve moved to a later version, in which speed is a separate setting (not an “effect”) and seems to be basically unlimited, so this isn’t strictly necessary. But there are other reasons to make this intermediate:

Focus

It’s often the case that I only need to log the work on one monitor. Often, for example, I have Kate open on the smaller monitor for note-taking, and I don’t need to capture that. So I have a custom “Edge Crop” effect in Kdenlive set up to cut the log down to “Disp1” or “Disp2” to determine which monitor (display) I want to log.
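The Disp1/Disp2 presets amount to trimming fixed margins from the combined frame. This sketch computes those margins, assuming display 2 sits at the lower right of the 5760×2160 desktop (offset 3840, 1080), as the notch in the upper right suggests; it does not reproduce Kdenlive's actual effect parameters. For comparison, it also expresses the same region as an argument for ffmpeg's `crop` filter, which takes `width:height:x:y`.

```python
# Assumed geometry: full capture is 5760x2160, display 1 (UHD) at the origin,
# display 2 (HD) at x=3840, y=1080 (lower right).
FRAME_W, FRAME_H = 5760, 2160
DISPLAYS = {
    "Disp1": (0, 0, 3840, 2160),       # (x, y, width, height)
    "Disp2": (3840, 1080, 1920, 1080),
}


def edge_crop(name):
    """Pixels to trim from each edge of the full frame to isolate one display."""
    x, y, w, h = DISPLAYS[name]
    return {"left": x, "top": y,
            "right": FRAME_W - (x + w), "bottom": FRAME_H - (y + h)}


def ffmpeg_crop(name):
    """The same region as an ffmpeg crop filter string (crop=w:h:x:y)."""
    x, y, w, h = DISPLAYS[name]
    return f"crop={w}:{h}:{x}:{y}"


for name in DISPLAYS:
    print(name, edge_crop(name), ffmpeg_crop(name))
```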

You may notice that the time index is only available on the large monitor. So, if I’m working on the smaller display, I’ll often do an overlay at this point, “Timecode Inset”. This shrinks and shifts the display 2 image so it can be overlaid on the display 1 image to get the action on display 2, but with the timecode included.

And occasionally, I actually do want both monitors to be logged. Usually, it’s desirable to overlay both on the screen, so I have another custom effect stack for that: it puts the 2nd monitor in the upper left corner of the 1st monitor capture. This usually covers up a part of the screen I’m not really using.

And it’s possible to do something custom if those prearranged effects stacks don’t cover my needs.

Scale/Speed

The FFMPEG output is 1 fps, but not accelerated. So it’s necessary to apply a speed effect (3000%) to pull this up to 30fps, which presents the exact same frames, but faster.

Also, the original 5760×2160 frame size is cumbersome. I generally want to shrink the output down to 1920×1080 for more manageable playback (and editing).
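One detail worth checking when scaling: 1920×1080 is 16:9, but the full 5760×2160 desktop is 8:3, so it only shrinks cleanly to 1920×1080 after being cropped to a single display. This small Python check (a sketch, not part of any editor) computes the uniform scale factor and compares the aspect ratios.

```python
from fractions import Fraction


def scale_to(src_w, src_h, dst_w=1920, dst_h=1080):
    """Uniform scale factor to fit src inside dst, and whether aspect ratios match."""
    factor = min(Fraction(dst_w, src_w), Fraction(dst_h, src_h))
    same_aspect = Fraction(src_w, src_h) == Fraction(dst_w, dst_h)
    return factor, same_aspect


print(scale_to(3840, 2160))  # (Fraction(1, 2), True): display 1 halves cleanly
print(scale_to(5760, 2160))  # (Fraction(1, 3), False): full desktop does not fit 16:9
```

Scaled by 1/3, the full desktop comes out at 1920×720, so it would have to be letterboxed, or distorted if forced into a 1920×1080 frame.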

Walkaways

I do not always turn off logging when I walk away from the computer. It’s not necessary if I’m just taking a bathroom break or getting another cup of tea. And occasionally, I just forget and go off for hours, leaving the logger recording lots of identical frames. And at least a few times, I have fallen asleep in my chair!

There are also some times when I left the logger going while some long process was running and reporting data to the screen (Rsync jobs, Blender rendering, etc.). I don’t necessarily want to keep all of that.

It would be very tedious to sit through all of that when reviewing, or even when editing.

So, in practice, what I do is speed the capture up not by 3000% (30X), but by 18000% (180X). Any more than this, and my Kdenlive can’t keep up with the frame rate anyway. Then I can quickly move through the timeline to find where the pauses are, and edit them out. After I’ve found and trimmed all the clips as desired, I change them back to 30X and put them together.

Be aware: there is a little bit of bugginess in Kdenlive’s “Change Speed” tool. I have found that when I pop the 180X clips back down to 30X, there are small shifts in start and end points. I’m not sure what all the factors are that affect this, but the practical solution is to avoid cutting too closely — leave some footage in at beginning and end, which can be cut out in the next step.
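Finding walkaways by scrubbing at 180X could, in principle, be partly automated. This is a minimal sketch, assuming you already have a sorted list of capture times (in seconds) at which the screen changed; producing that list, for example by comparing consecutive frames, is outside its scope, and the 5-minute threshold is an arbitrary choice.

```python
def find_walkaways(change_times, min_gap=300):
    """Return (start, end) spans, in seconds, with no screen change for at least min_gap.

    change_times: sorted capture times at which the frame differed from the
    previous one (a hypothetical input, e.g. from a frame-differencing pass).
    """
    gaps = []
    for prev, cur in zip(change_times, change_times[1:]):
        if cur - prev >= min_gap:
            gaps.append((prev, cur))
    return gaps


changes = [0, 5, 12, 20, 1500, 1510, 1520, 9000]
print(find_walkaways(changes))  # [(20, 1500), (1520, 9000)]
```

The spans it reports are candidates to cut, not decisions: a long unattended render also shows up as a gap, and may be worth keeping at a higher speed instead.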

Annotation/Daily Logs

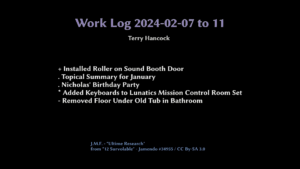

Although I keep a text file for the notes of each month, it’s also nice to include the text as titlecards in my video. I also want an up-front card with the time, date, and a few highlights. Also, mostly for fun, I include music from my large free-licensed music library that I collected for Lunatics Project soundtracks, and I want to include a credit for that, of course:

This has a very quick bullet-list of things I got done during that period. I picked this one, because it shows a number of the conventions I’ve adopted: the title at the top includes a period of a few days, which have been lumped together, because only the last one has a video screencast. The others were “offline days”, which had photos and text only. The bullets are different characters, according to a mnemonic I came up with: “?” research, “+” added/built something, “-” tore down/removed something, “.” routine/personal things, “*” project work, “~” continuation of a multi-day project.

At the bottom is a music credit with artist, track, album, catalog number, and license.

Including the music also serves to keep the tracks in my memory, so I can recall them when I’m editing soundtracks.
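The bullet mnemonic is regular enough that a title card's text can be tallied by category. This sketch assumes the card text is available as plain lines, each starting with its bullet character; the sample lines are invented.

```python
from collections import Counter

# Bullet characters and their meanings, per the mnemonic described above.
BULLETS = {
    "?": "research",
    "+": "added/built",
    "-": "tore down/removed",
    ".": "routine/personal",
    "*": "project work",
    "~": "continuation of multi-day project",
}


def tally(lines):
    """Count bulleted lines by category; lines without a known bullet are skipped."""
    counts = Counter()
    for line in lines:
        stripped = line.lstrip()
        if stripped and stripped[0] in BULLETS:
            counts[BULLETS[stripped[0]]] += 1
    return counts


card = ["* storyboard revisions", "? FFmpeg x11grab options",
        "+ new render node", "~ rigging, day 3", "* storyboard revisions, cont."]
print(tally(card))
```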

This stage also gives me a second chance to cut out dead space in the log. I also usually speed up boring/passive parts like watching an unattended Rsync copy or Blender render finish by an additional 10X (so they’re 300X realtime).
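Mixing speeds this way is easy to reason about: each segment contributes its real duration divided by its speed. The example day below is hypothetical.

```python
def log_duration(segments):
    """Playback length (seconds) of a log built from (real_seconds, speed) segments."""
    return sum(real / speed for real, speed in segments)


# Hypothetical day: 5 h of active work at 30X, plus a 2 h unattended render
# sped up a further 10X (300X overall).
day = [(5 * 3600, 30), (2 * 3600, 300)]
print(log_duration(day))  # 624.0 seconds, about 10.4 minutes
```

Without the extra 10X on the render, the same day would run 840 seconds (14 minutes) instead of 624.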

I don’t have many of my daily worklogs online for security reasons, but I have this sample from 2019:

Producing these daily worklogs, from capture to daily log video, takes about 1 day per week (although I often put it off and then have to spend 2-3 days catching up). So that’s about 17% of my work time. A significant cost!

But this has to be weighed against how much time it saves, which can be tricky to estimate. Before I adopted this practice, it was not uncommon for a single forgotten process to cost as much as 2-4 weeks of re-learning and/or re-engineering a new solution to replace what I’d forgotten. Since adopting it, I have usually had at least one or two occasions a year where the documentation value of these logs has paid off. So, while I can’t put definite numbers on it, I’m convinced this is saving more time than it costs.

Topical & Monthly Summaries

The daily logs are presented at 30X speed, which is slow enough to follow along. And they are strictly chronological (or nearly — the offline photos are typically after all the video, though I may actually have switched between tasks during the day). These are good for detailed documentation purposes.

However, for time management, it’s a lot better to use 60X, so that minutes become seconds and hours become minutes. It’s also useful to collect tasks by topic. I call this my “monthly topical summary”. I can then make measurements on the Kdenlive timeline by dropping a “color clip”, stretching it to match the collection of related clips, and then reading off the duration of the clip. I record these as a “Time Breakdown” at the end of each month’s text notes, and include this information in my monthly reports on this Production Log for people following the project.

Note that all of this measuring happens in the Kdenlive editor, so I don’t strictly have to render this “Topical Summary” video. But at that point, why not go ahead?
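The color-clip measurements can also be totted up outside the editor. A minimal sketch, assuming the clip durations have been read off a 60X timeline in seconds (so each timeline second is one real minute); the topic names and durations are invented for illustration.

```python
from collections import defaultdict


def time_breakdown(clips, speed=60):
    """Sum real working hours per topic from (topic, timeline_seconds) entries."""
    hours = defaultdict(float)
    for topic, timeline_seconds in clips:
        hours[topic] += timeline_seconds * speed / 3600
    return dict(hours)


# Hypothetical durations measured on a 60X timeline (seconds on the timeline).
clips = [("Blender", 95), ("Server admin", 40), ("Blender", 125), ("Kdenlive", 30)]
for topic, h in sorted(time_breakdown(clips).items(), key=lambda kv: -kv[1]):
    print(f"{topic:<14}{h:6.2f} h")
```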

Much of that time is pretty boring and/or includes difficult-to-redact work like doing my taxes or configuring a web application server. So I cut out the “good parts version” with Blender and Video stuff, and these are what you can find as “Monthly Timelapses” on my PeerTube, and included in the monthly summary posts here on my Production Log. Again, with music — usually drawn from the music I used on the dailies for that month, and occasionally thematic to whatever I was doing or how I was feeling.

Annual Summaries

From 2021-2023, I also cut together a video from the topical summaries, collecting all the most significant work I did during that year, to include with my “annual report” archive. This is mostly just for fun.

Disposal

I delete the “capture” videos as soon as I’m sure I’ve got what I need from them. I keep the “raw” video edits for a month or two, just in case the daily edit missed something that I want to fix, then delete them. And then I keep the “daily”, “topical”, “monthly”, and “annual” videos permanently, archived on M-Disc as part of my “annual archive” for each year. Some of the dailies are marked “CONFIDENTIAL” because of passwords on screen or something else I want to make sure doesn’t become public (but want to keep for my own records).

I wind up with about 50-100 GB of video per year this way. So, with the extra material, my annual archives take up about 4-6 M-Discs.
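The retention rules above can be written down as a small decision function, which is handy if you ever script the cleanup. This is a hypothetical sketch: the category names mirror the text, and the 60-day window is an assumed stand-in for “a month or two”.

```python
import datetime

PERMANENT = {"daily", "topical", "monthly", "annual"}


def keep(kind: str, made: datetime.date, today: datetime.date,
         extracted: bool = False) -> bool:
    """Decide whether a worklog video of the given kind should still be kept."""
    if kind == "capture":
        return not extracted               # delete once the footage has been edited
    if kind == "raw":
        return (today - made).days <= 60   # assumed 60-day grace period
    if kind in PERMANENT:
        return True                        # archived permanently
    raise ValueError(f"unknown kind: {kind}")


today = datetime.date(2024, 5, 21)
print(keep("raw", datetime.date(2024, 3, 1), today))  # False
```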

Retrieval

For ease of reference, I keep past logs on a live storage hard drive where I can get to them easily. So far, this includes all of them, although I probably will drop the earlier ones eventually. I can locate the required logs by using grep on the text log files (one for each month of the year), looking for keywords. This will tell me the date range I need to check for video logs. And then I can just queue those up in VLC for viewing. Typically, I’ll be able to spool quickly to the relevant part of the video, and watch from there.
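Once grep has narrowed things to a date range, collecting the matching daily videos is a short loop. This sketch assumes a hypothetical naming scheme (`daily-YYYY-MM-DD.mkv` in one directory); adjust the pattern to match your own files.

```python
import datetime
from pathlib import Path


def dailies_for_range(log_dir, start, end, pattern="daily-{:%Y-%m-%d}.mkv"):
    """List existing daily log videos from start to end, inclusive, in date order.

    The naming pattern is hypothetical; days without a video are skipped.
    """
    files = []
    day = start
    while day <= end:
        path = Path(log_dir) / pattern.format(day)
        if path.exists():
            files.append(str(path))
        day += datetime.timedelta(days=1)
    return files


files = dailies_for_range("/worklog/daily",
                          datetime.date(2024, 5, 1), datetime.date(2024, 5, 7))
print(files)
```

VLC accepts several files on its command line and queues them as a playlist, so the result can be passed straight to it, for example with `subprocess.run(["vlc", *files])`.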

Improvements?

Can this process be improved upon? Almost certainly. A range of technologies which tend to get lumped under the heading of “artificial intelligence” very well could be applied to it:

Automatic Direction

Certainly, by listening to mouse and keyboard events or even just analyzing the screen, it should be possible to figure out where on my large two-monitor workspace I’m actually working. Then it could automatically handle the cropping decisions that I make when editing my video captures. That could save a lot of time.

Automatic Indexing

By using OCR or other character recognition technologies, the AI could try to keep track of headings and other text on screen that might give a clue what I’m working on. It could also record the applications that are open at any given time for similar reasons. It could detect patterns in this usage, and try to collect an index of keywords for me to search for. I’m more skeptical of this working, but the devil is in the details.

I suspect, based on the press releases, that Microsoft “Recall” is trying to do something like this.

Dangers

So, based on this experience and what little I know of the Microsoft “Recall” software, what do I think?

I do think this kind of technology will be useful, and the AI incorporated will likely be convenient — making it practical for people who don’t want to spend the amount of time I have on logging workflow. This will likely open up some of the benefits for people who are less motivated than I am to track all their work on the computer.

The problem with Microsoft’s “Recall” software is the problem with ALL of their software: it’s a huge security risk to let a perversely-motivated corporate entity do what it likes with your most sensitive data, particularly when you have no means of oversight or verification of what they tell you they are going to do with it. And I mean this in the jargon sense: the company has a “perverse incentive” to betray your trust. And since the code is proprietary, there is no effective means of holding them to their word.

And Microsoft (like Apple and Google and Adobe) has a long history of abusing this kind of user trust. There is absolutely no reason to believe they will behave more responsibly in this case.

Also, this certainly sounds like a “foot in the door”, “slippery slope” move: they promise to keep this data and keep it locally on your machine for security reasons. This assuages your concerns so that you may become dependent on the tool. And from there, it is an extremely short step to “storing your data in the cloud”. And from there, a short step to “we will use AI scans of your data to help you out” and “we will train our AI on your private data”. Past actions from corporate software entities strongly suggest this will happen.

And, as I have noted: even with everything transparently designed and stored locally, using all open-source tools, there are security risks to having screen capture logs. It is a kind of surveillance technology, even if only to surveil yourself. I am really only willing to take this risk because I control all of the components in my workflow.

In a corporate setting, of course, this also opens the door for other people to have access to your logs. As I already mentioned — software like this exists for bosses to micro-manage their staff’s computer time. Widespread use of a tool like “Recall” invites the possibility of management tools that collect these AI indexes to create “performance reviews” or to catch violations of workplace computer use policies. There are dystopian possibilities here!

For myself, I will certainly be sticking to my manual screenlogging workflow.

In addition to the security dangers, the process itself is part of the payoff for doing it: when I am editing daily worklogs, I am also reviewing and evaluating the work that I logged, which was a major reason for recording them in the first place. After that, it isn’t strictly necessary to watch them again, unless I need to consult them for documentation purposes. So this might be another case of the AI automating something I don’t really want to automate.